If you need public access:

https://github.com/anderspitman/awesome-tunneling

From this list, I use rathole. One rathole container runs on my VPS and another runs on my home server, and together they expose my reverse proxy (caddy) to the public.

https://nixlang.wiki/en/tricks/distrobox

Not the nix way, but when you really need something to work, you can create containers of other distros.

From what I've heard, true multiseat is very difficult to configure. You probably also want to investigate using GPU accelerated containers, because it's legitimately easier to share the same GPU across multiple containers than across multiple seats.

Wezterm. I started out on konsole, and was happy with it, but then I started using zellij as my terminal multiplexer. Although zellij allows you to configure what command copies and pastes text, copy/paste on Wayland and Windows only works by default with wezterm. It gives me consistency across multiple DEs/OSes with minimal configuration, which is good because I was setting up development environments for many people, with many configurations.

I just use VMs for that. I recently learned that Vagrant, an infrastructure-as-code tool for virtual machines designed for creating testing VMs, creates an Ansible inventory that you can run your playbooks against.
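A rough sketch of what that looks like in practice, assuming the Ansible provisioner is enabled in the Vagrantfile (as far as I know the inventory only gets generated then) and that it lands at the usual `.vagrant/provisioners/ansible/inventory/vagrant_ansible_inventory` path. The project directory and playbook name are made up for the example:

```python
from pathlib import Path

# Assumption: where Vagrant's Ansible provisioner writes its generated inventory,
# relative to the directory containing the Vagrantfile.
INVENTORY_RELATIVE = Path(
    ".vagrant/provisioners/ansible/inventory/vagrant_ansible_inventory"
)


def playbook_command(project_dir, playbook):
    """Build an ansible-playbook command that targets the Vagrant-generated inventory."""
    inventory = Path(project_dir) / INVENTORY_RELATIVE
    return ["ansible-playbook", "-i", str(inventory), str(playbook)]


if __name__ == "__main__":
    # Hypothetical project directory and playbook, printed rather than executed.
    print(" ".join(playbook_command(".", "site.yml")))
```

You could pass the resulting list to `subprocess.run` once the VM is up, or just type the same command by hand.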

Okay. A little context is needed.

Qemu is just the emulator used. For the most part, it cannot handle networking.

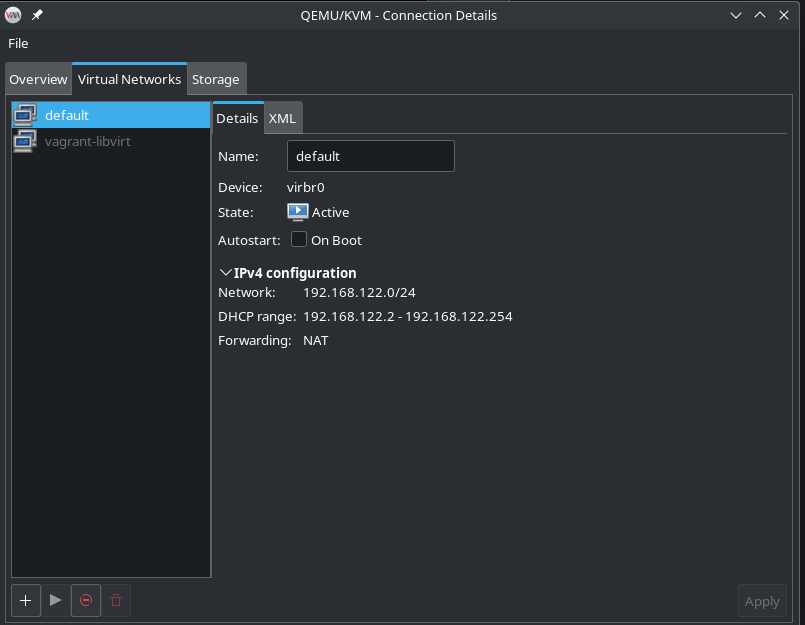

Libvirt is a system daemon that handles running qemu and whatnot, and also configures networking. Virt-manager interacts with this libvirt daemon.

Now, by default, libvirt creates a bridge for all virtual machines to use, to create a simple one-to-many NAT.

The above is looking at the bridge on my laptop*. However, each virtual network has to be started.

Then, when you create a virtual machine, it should automatically select the bridge interface, and no further configuration should be required...
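For reference, here is a minimal sketch of the kind of network definition libvirt uses for that NAT setup, built with Python's standard library. The names and addresses (`default`, `virbr0`, `192.168.122.x`) are what I'd expect on a typical install, but treat them as assumptions and check your own with `virsh net-dumpxml default`:

```python
import xml.etree.ElementTree as ET


def nat_network_xml(name="default", bridge="virbr0",
                    gateway="192.168.122.1", netmask="255.255.255.0",
                    dhcp_start="192.168.122.2", dhcp_end="192.168.122.254"):
    """Return libvirt network XML for a NAT network behind a host bridge.

    All default values are assumed typical defaults, not read from any host.
    """
    net = ET.Element("network")
    ET.SubElement(net, "name").text = name
    # forward mode="nat" is what makes this a one-to-many NAT.
    ET.SubElement(net, "forward", mode="nat")
    # The host-side bridge that every guest on this network attaches to.
    ET.SubElement(net, "bridge", name=bridge)
    # The host's address on the bridge, plus the DHCP range handed to guests.
    ip = ET.SubElement(net, "ip", address=gateway, netmask=netmask)
    dhcp = ET.SubElement(ip, "dhcp")
    ET.SubElement(dhcp, "range", start=dhcp_start, end=dhcp_end)
    return ET.tostring(net, encoding="unicode")


if __name__ == "__main__":
    print(nat_network_xml())
```

XML like this can be loaded with `virsh net-define` and then started with `virsh net-start`, which is the "has to be started" step mentioned above.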

However, that's just NAT. Configuring the other virtual networking types should also be doable from virt-manager, and I find it kinda weird that you are going to NetworkManager for this. The default is NAT, but

By "multiple local ips", do you mean something like how virtual machines share a physical device (possibly with the host?), and get their own ip address on the same network as the host?

Macvtap is probably the easier way to do that: just select macvtap and the physical device you want to attach your virtual machine to. However, there are caveats with host-guest network communication.

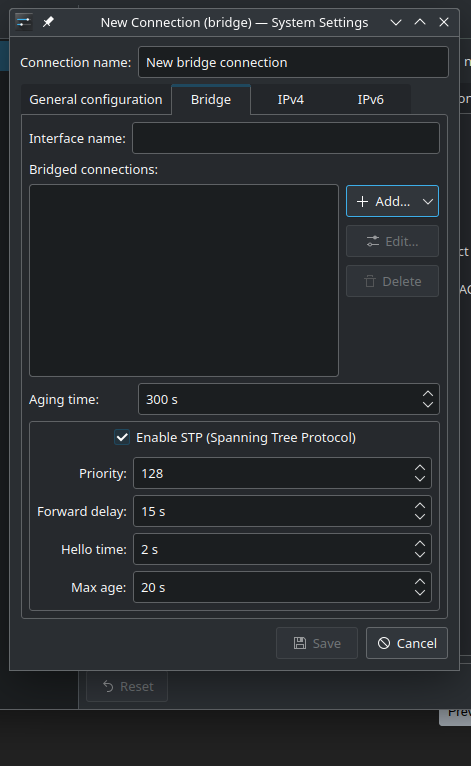
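Under the hood, that macvtap choice ends up as a "direct" interface in the VM's libvirt XML. A minimal sketch of that element, where the device name `enp3s0` is just a placeholder for whatever your physical interface is called:

```python
import xml.etree.ElementTree as ET


def macvtap_interface_xml(host_dev, mode="bridge"):
    """Return a libvirt <interface> element attaching a guest to host_dev via macvtap."""
    # type="direct" is libvirt's name for a macvtap attachment.
    iface = ET.Element("interface", type="direct")
    ET.SubElement(iface, "source", dev=host_dev, mode=mode)
    # virtio is an assumption here; any NIC model your guest supports works.
    ET.SubElement(iface, "model", type="virtio")
    return ET.tostring(iface, encoding="unicode")


if __name__ == "__main__":
    # "enp3s0" is a hypothetical device name.
    print(macvtap_interface_xml("enp3s0"))
```

The guest then gets its own address on the same network as the host, with the host-guest communication caveat mentioned above.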

Bridged is more complex, especially when you only have one physical ethernet port. Converting an ethernet interface to a bridge usually also prevents it from being used as a normal ethernet port.

The easier, more reliable, tested method requires two ethernet interfaces, both plugged in and connected.

Create a bridge in NetworkManager, and select the non-host interface as the bridge's port:

There is a less reliable way if you only have one ethernet interface: you can convert it into a bridge that still works as a usable network interface. Although you can do this by hand in NetworkManager (or whatever network management service you are using), there is a much easier way:

After that, cockpit will automatically configure the bridge to be a usable internet interface as well, and then you can select that bridge as your bridge in virt-manager....

Except I had some issues with nested bridging on kernels older than 6.0, so obviously this setup adds extra complexity.

Anyway, I highly recommend you access cockpit remotely, rather than over RDP, if you are going to do this, so that cockpit can properly test the network connection (it automatically reverts changes within a time period if they break connectivity).

I also recommend reading: https://jamielinux.com/docs/libvirt-networking-handbook/ for an understanding of libvirt networking, and which

*okay, maybe inline images are kinda nice. I thought they were annoying at first but they obviously have utility.

Provision Management Software

Openstack skyline/horizon

Compute

Openstack nova

And so on. Openstack is also many, many components, that can be pieced together for your own cloud computing platform.

Although it won't have the sheer number of services AWS has, many of them are redundant.

The core services I expect to see done first: compute, networking, storage (+ image storage), and a web UI/API

Next: S3 storage, Kubernetes as a service, and then either Databases as a service or containers as a service.

But you are right, many of the services that AWS offers are highly specialized (robotics, space communication), and people get locked in, and I don't really expect to see those.

AWS is software. Just not something you can self host.

There already exist alternatives to AWS, like localstack, a local AWS for testing purposes, or the more mature openstack, which is designed for essentially running your own AWS at scale.

> You cannot run a GUI in LXC

It's probably possible, especially considering lxc can run systemd nowadays, and I can find many sources on this, for GUI and for GPU acceleration (but not in proxmox):

https://stgraber.org/2017/03/21/cuda-in-lxd/

And then there are also technologies like KasmVNC which can serve a GUI as a website, and it doesn't need a GPU at all.

EDIT: Two year old guide, but a redditor pulled it off

The new Mars helicopter, Ingenuity, runs Linux.

https://www.theverge.com/2021/2/19/22291324/linux-perseverance-mars-curiosity-ingenuity

Their solution is to hold two copies of memory and double check operations as much as possible, and if any difference is detected they simply reboot. Ingenuity will start to fall out of the sky, but it can go through a full reboot and come back online in a few hundred milliseconds to continue flying.

https://news.ycombinator.com/item?id=26181763
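To make the idea concrete, here is a toy sketch of "keep two copies, compare, reboot on mismatch". This is purely my own illustration of the technique described in that comment, not anything from the actual flight software; every name in it is made up:

```python
class MismatchDetected(Exception):
    """Raised when the two copies of a value disagree."""


class RedundantState:
    """Holds every value twice and cross-checks the copies on each read."""

    def __init__(self, initial):
        self._a = dict(initial)
        self._b = dict(initial)

    def write(self, key, value):
        # Every write goes to both copies.
        self._a[key] = value
        self._b[key] = value

    def read(self, key):
        # A disagreement means one copy was corrupted; we can't tell which.
        if self._a[key] != self._b[key]:
            raise MismatchDetected(key)
        return self._a[key]


def read_or_reboot(state, key, boot):
    """Read a value; on mismatch, 'reboot' by rebuilding state from scratch."""
    try:
        return state.read(key), state
    except MismatchDetected:
        fresh = boot()
        return fresh.read(key), fresh


if __name__ == "__main__":
    state = RedundantState({"altitude": 10})
    state._b["altitude"] = 11  # simulate a bit flip in one copy
    value, state = read_or_reboot(
        state, "altitude", lambda: RedundantState({"altitude": 0})
    )
    print(value)
```

The point is that nothing tries to repair the bad copy; it just throws everything away and starts clean, which only works if the reboot is fast enough.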

Dunno if future ones will run Linux though, since this is just an experiment.

I'm not too well versed in rustdesk, but it seems that they use end to end encryption (is it good? Idk).

https://github.com/rustdesk/rustdesk/discussions/2239#discussioncomment-5647075

I have experience with a similar piece of software that uses relays, syncthing. With syncthing, everything is e2ee, so there's no concern about whether or not the relays are trustworthy, and you can even host your own public relay server.

I find it hard to believe that rustdesk, another relay based software, wouldn't have a similar architecture.

edit: typo

My problem with this is that, when running a public-facing server, it ends up with people exposing containers that really, really shouldn't be exposed.

Excerpt from another comment of mine:

It’s only docker where you have to deal with something like this:

Originally from here, edited for brevity.

Resulting in exposed services. Feel free to look at shodan or zoomeye, search engines for internet-connected devices, for exposed instances of this service. This service is highly dangerous to expose, as it gives people a way into your system via the docker socket.
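If you want to sanity-check your own setup, here is a small sketch that flags Docker port mappings which listen on every interface. It assumes the exposure comes from published ports written in the short `[host_ip:]host_port:container_port[/proto]` form; the function name is my own and it doesn't try to handle every syntax Docker accepts:

```python
def binds_all_interfaces(publish_spec):
    """Return True if a Docker short-form port mapping listens on all interfaces."""
    # Drop an optional protocol suffix such as "/tcp" or "/udp".
    spec = publish_spec.split("/", 1)[0]
    parts = spec.split(":")
    # "80" or "8080:80": no host IP given, so Docker binds every interface.
    if len(parts) <= 2:
        return True
    # Everything before the last two fields is the host IP (IPv6 contains colons).
    host_ip = ":".join(parts[:-2])
    return host_ip in ("", "0.0.0.0", "::", "[::]")


if __name__ == "__main__":
    for spec in ("8080:80", "127.0.0.1:8080:80", "0.0.0.0:9000:9000/tcp"):
        print(spec, binds_all_interfaces(spec))
```

Anything that only a reverse proxy on the same host needs to reach is generally safer bound to `127.0.0.1`.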