Has anyone else been called crazy for home-labbing front-facing stuff?

I've always had this mindset of asking, "What am I really getting out of this?" But when it came to the internet and what I posted, I held onto a bit of innocence. Over the past two years that innocence has been chipped away, though I think I’ve managed to reclaim it.

I don’t fault for-profit companies like Reddit for monetizing content; honestly, it was my own oversight for not reading the terms of service carefully. But since then, I’ve realized just how much I’ve unknowingly contributed to other projects for free.

There’s nothing inherently wrong with that, but does anyone else ever feel a bit... exploited?

It’s like when a recruiter asks for a .docx version of your resume instead of the .pdf you provided. Maybe it’s just to hide your contact details, or maybe there’s something more dubious at play. I’ve experienced both, and each time, I’ve ended up feeling a bit... used.

Now, when a recruiter asks for a .docx, I ask them why. If it’s to hide contact details, I send an anonymized version. If they want to trim it down to two pages, I direct them to the summary section on my professional website. And if they want to add their bits to it, I guide them to my website, where they can explore my detailed posts.

For me, it’s about reclaiming control over what I’ve shared.

I was talking to someone about this recently, and they mentioned that they like to post everything on GitLab to showcase what they’ve been working on. To me, though, that’s just not the same as self-hosting your own Gitea or GitLab instance. He thought I was crazy for hosting a single-instance GitLab.

Take X, for example. There, I could have a super locked-down account like I do here, only contributing to communities when I want to by directly tagging them, and otherwise using it as a personal journal like my Mastodon. But it’s just not the same: when X started monetizing posts, the platform's objective changed.

I don’t mind 'for-profit,' but when it’s driven by short-term gains like a monetized post, eventually all engagement is funneled towards that. It ends up feeling like you’re writing in someone else’s diary, one that you tailor for engagement.

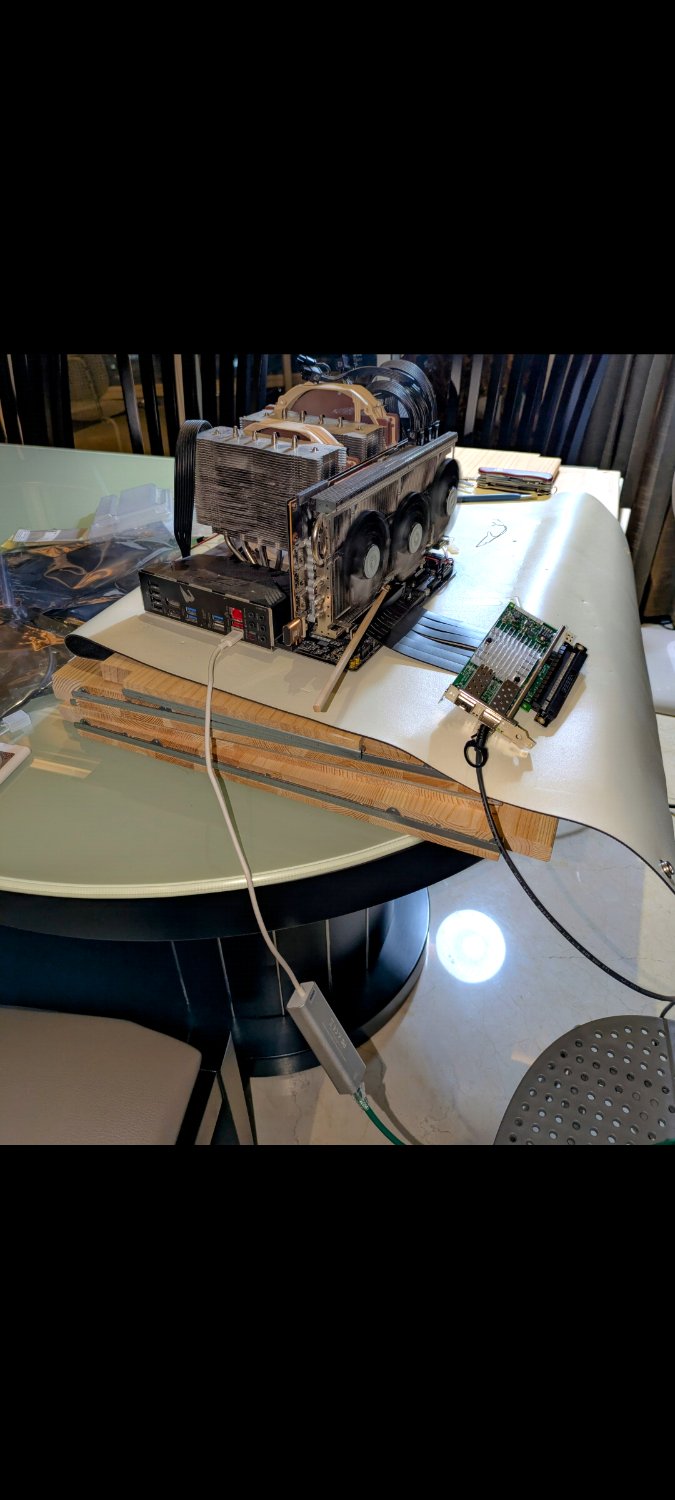

It’s also about the love of tinkering: breaking things, fixing them, and getting everything back up to spec. It’s about embracing the original idea of the internet: a decentralized space where anyone can contribute, without your work being exploited.

It’s your own little corner where you can post whatever you want, for whomever you want. A Jellyfin server for my partner, a portfolio for the hiring manager, a GitLab for my playground. It’s about enjoying the freedom to experiment without an ops exec pulling their hair out.

It's kinda magical.

Footnote: This is my first post to this community. If it isn't a good fit, please let me know and I'll gladly adjust or remove it.

Tags for Federation: @homelab

#homelab #macroblog