this post was submitted on 16 Oct 2024

Fediverse

A community to talk about the Fediverse and all its related services using ActivityPub (Mastodon, Lemmy, KBin, etc.).

☑ Clear label for bot accounts

☑ 3 different levels of captcha verification (I use the intermediate level on my instance and rarely have to deal with bots)

Profiling algorithms seem like something people are running away from when they choose Fediverse platforms; this kind of solution would have to be very well thought out and clearly communicated.

☑ Reporting in Lemmy is just as easy as anywhere else.

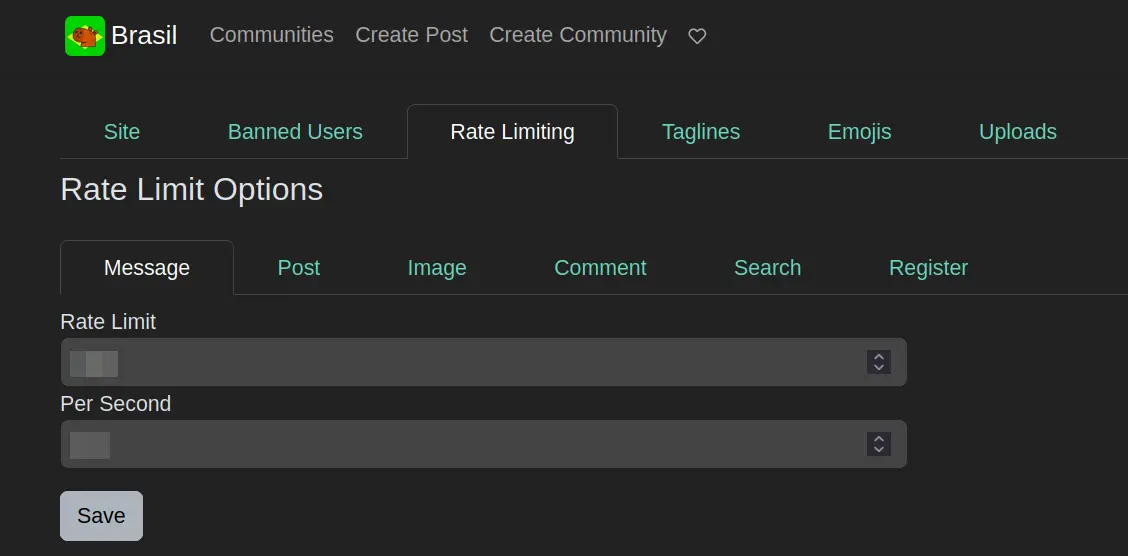

☑ Like this?

What do you suggest other than profiling accounts?

This is not up to the Lemmy development team.

Idem.

Mhm, I love dismissive "Look, it already works, and there's nothing to improve" comments.

Lemmy lacks significant capabilities to effectively handle the bots of 10+ years ago, never mind today's bots.

The controls that are implemented are based on "classic" bot concerns from nearly a decade ago. Even then, they're shallow and only "kind of" effective. They wouldn't have been considered effective for a social media platform in 2014, and they are nowhere near adequate today.