According to the documents, as of April 2024 Cellebrite could not unlock any iPhone running iOS 17.4 or newer, labeling those versions “In Research.” For iOS 17.1 to 17.3.1, the company could unlock the iPhone XR and iPhone 11 series using its “Supersonic BF” (brute force) capability. However, iPhone 12 and newer models running these iOS versions were listed as “Coming soon.”

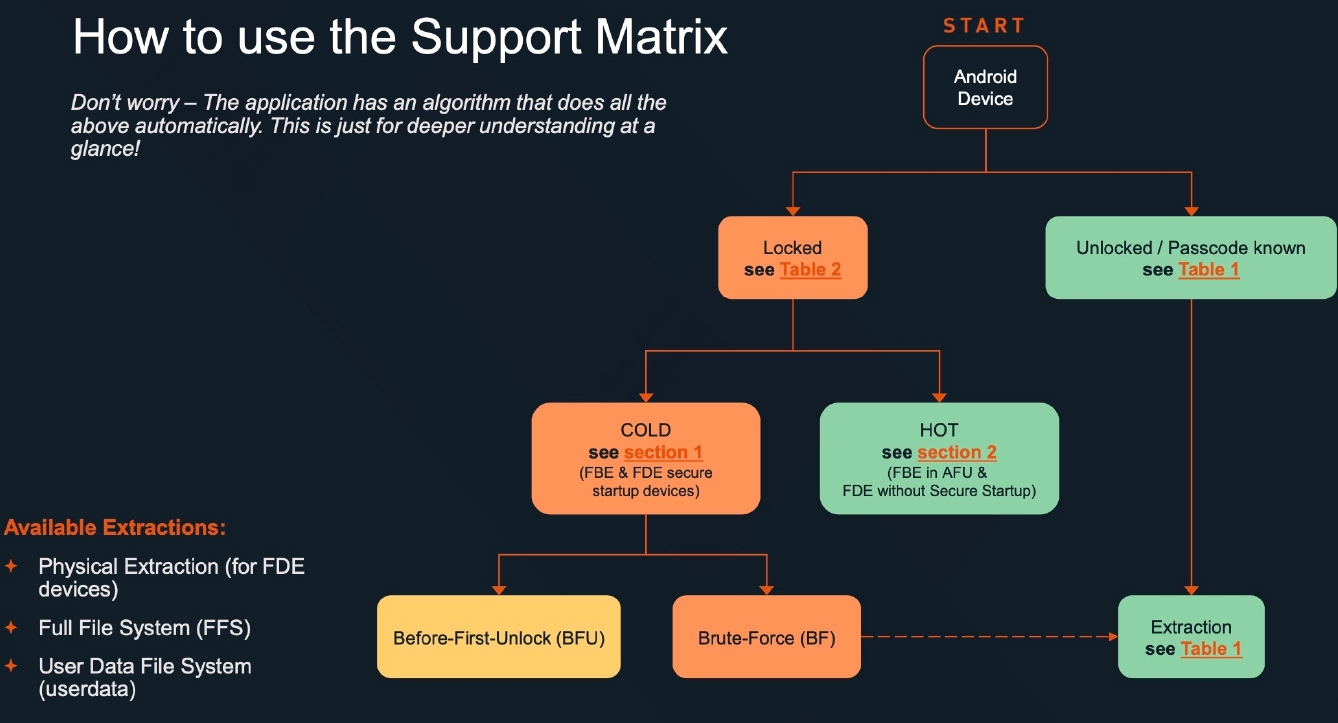

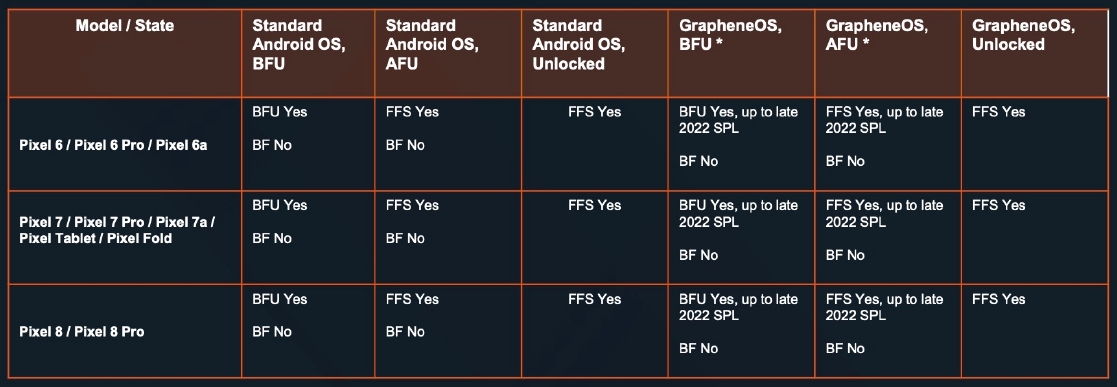

The Android support matrix showed broader coverage for locked Android devices, though some limitations remained. Notably, Cellebrite could not brute-force Google Pixel 6, 7, or 8 devices that had been powered off. The document also specifically mentioned GrapheneOS, a privacy-focused Android variant reportedly gaining popularity among security-conscious users.


GrapheneOS has a thread about this on Mastodon, which adds a bit more detail:

Cellebrite was a few months behind on supporting the latest iOS versions. It's common for them to fall a few months behind for the latest iOS and quarterly/yearly Android releases. They've had April, May, June and July to advance further. It's wrong to assume it didn't change.

404media published an article about the leaked documentation this week. It doesn't analyze the leaked information in as much depth as we did, but it didn't make any major errors. Many news publications are now writing highly inaccurate articles about it following that coverage.

The detailed Android table showing the same info as iPhones for Pixels wasn't included in the article. Other news publications appear to be ignoring the leaked docs and our thread linked by 404media with more detail. They're only paraphrasing that article and making assumptions.

We received Cellebrite's April 2024 Android and iOS support documents in April and from another source in May before publishing it. Someone else shared those and more documents on our forum. It didn't help us improve GrapheneOS, but it's good to know what we're doing is working.

It would be a lot more helpful if people leaked the current code for Cellebrite, Graykey and XRY to us. We'll report all of the Android vulnerabilities they use whether or not they can be used against GrapheneOS. We can also make suggestions on how to fix vulnerability classes.

In April, Pixels added a reset attack mitigation feature based on our proposal ruling out the class of vulnerability being used by XRY.

In June, Pixels added support for wipe-without-reboot based on our proposal to prevent device admin app wiping bypass being used by XRY.

In Cellebrite's docs, they show they can extract the iOS lock method from memory on an After First Unlock device after exploiting it, so the opt-in data classes for keeping data at rest when locked don't really work. XRY used a similar issue in their now blocked Android exploit.

GrapheneOS zero-on-free features appear to stop that data from being kept around after unlock. However, it would be nice to know what's being kept around. It's not the password since they have to brute force so it must be the initial scrypt-derived key or one of the hashes of it.
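The reasoning in that last paragraph, that a leftover scrypt-derived key does not reveal the password and still has to be brute-forced, can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the function names `derive_key` and `brute_force_pin`, the all-zero salt, the 4-digit PIN, and the deliberately tiny scrypt cost parameters are hypothetical. It does not reflect how iOS, Android, GrapheneOS, or any forensic tool actually derives or attacks keys, where derivation is typically bound to hardware and rate limited.

```python
import hashlib
import hmac
from itertools import product
from typing import Optional

# Toy cost parameters (assumption) so the demo finishes quickly.
# Real systems use far higher costs and usually tie the key to hardware.
TOY_N, TOY_R, TOY_P = 2**4, 1, 1


def derive_key(pin: str, salt: bytes) -> bytes:
    """Derive a 32-byte key from a PIN with scrypt (one-way)."""
    return hashlib.scrypt(
        pin.encode(), salt=salt, n=TOY_N, r=TOY_R, p=TOY_P, dklen=32
    )


def brute_force_pin(
    target_key: bytes, salt: bytes, digits: int = 4
) -> Optional[str]:
    """Try every numeric PIN of the given length until one derives target_key."""
    for combo in product("0123456789", repeat=digits):
        guess = "".join(combo)
        if hmac.compare_digest(derive_key(guess, salt), target_key):
            return guess
    return None  # no PIN of this length matches


if __name__ == "__main__":
    salt = bytes(16)  # hypothetical fixed salt for the demo
    leftover_key = derive_key("0420", salt)  # stands in for a key found in memory
    print(brute_force_pin(leftover_key, salt))
```

The derived key cannot be inverted to recover the PIN directly, so the only route is to repeat the derivation for each candidate. That is cheap for a short numeric PIN with toy parameters and much more expensive for a long passphrase or a hardware-throttled derivation.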