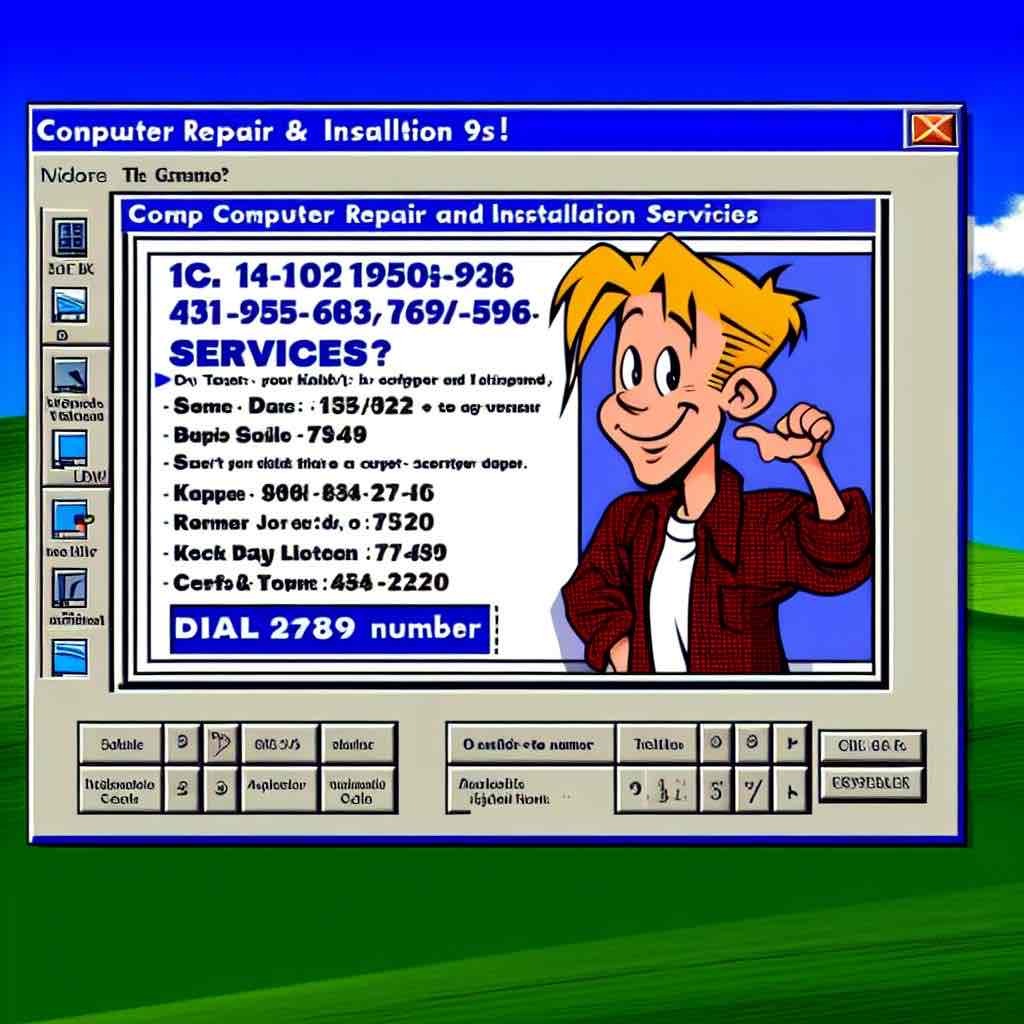

Well, it got the number of fingers correct.

My Conpwuter is broken.

Conpwter repair & Insalltion 9s!

It is really weird how the AI seems to intentionally misspell most of the words. It doesn't even seem to be mixing up languages; I really don't understand the logic behind how the AI created this.

The Stable Diffusion algorithm is strange. I'm surprised someone thought of it, and surprised that it works.

IIRC, it works like this: Stable Diffusion starts with an image of completely random noise. The idea is that the text prompt given to the model describes a hypothetical image to which that noise was added. So, given the text, the model tries to "predict" what the image would look like if it were denoised a little bit. It repeats this until the image is fully denoised.

So, it's very easy for the algorithm to make a "mistake" in one iteration by coloring the wrong pixels black. It's unable to correct its mistake in later denoising iterations, and just fills in the pixels around it with whatever it thinks looks plausible. It also can't really "plan" ahead of time; it can only do one denoising operation at a time.
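For anyone curious, here is a toy sketch of that start-from-noise, denoise-a-little, repeat loop in Python. It is not Stable Diffusion's actual code: real Stable Diffusion runs the loop on a compressed latent image with a trained U-Net conditioned on the prompt's text embedding. Here the "image" is just a short list of numbers, `predict_noise` is a hypothetical oracle that cheats by already knowing the target image the prompt describes, and the update rule is the deterministic DDIM sampler with the DDPM paper's linear noise schedule (one choice among several samplers). As in the original DDPM formulation, the model predicts the noise, and removing part of that predicted noise yields the slightly less noisy image.

```python
import math
import random


def make_schedule(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule; returns alpha_bar[t] = prod_{i<=t} (1 - beta_i)."""
    alpha_bars = []
    prod = 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / max(steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def predict_noise(x_t, alpha_bar, target):
    """Hypothetical stand-in for the trained, text-conditioned network.

    A real model has to guess the noise from x_t and the prompt; this oracle
    cheats by knowing the clean 'target' image the prompt describes.
    """
    return [(x - math.sqrt(alpha_bar) * x0) / math.sqrt(1.0 - alpha_bar)
            for x, x0 in zip(x_t, target)]


def sample(target, steps=1000, seed=0):
    """Deterministic DDIM-style sampling: start from noise, denoise step by step."""
    rng = random.Random(seed)
    alpha_bars = make_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start: completely random noise
    for t in reversed(range(steps)):
        a_t = alpha_bars[t]
        eps = predict_noise(x, a_t, target)
        # Clean image implied by the current noise prediction.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t)
                  for xi, e in zip(x, eps)]
        a_prev = alpha_bars[t - 1] if t > 0 else 1.0
        # Step to a slightly less noisy image consistent with that estimate.
        x = [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * e
             for x0, e in zip(x0_hat, eps)]
    return x
```

Because the oracle knows the answer, `sample([0.0, 1.0, 1.0, 0.0, -1.0])` ends up at that target (up to floating-point error). A real network only estimates the noise at each step, so its output merely resembles what the prompt describes.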

It doesn't understand language. It's just producing something that superficially looks like it.

Calvin’s all grown up

Ah good. I was looking for someone who can make kappees.