Actually, as a web guy, I find the ARM architecture to be more than sufficient. Most of what I build is memory-heavy and CPU-light, so the Pi is great for it.

danielquinn

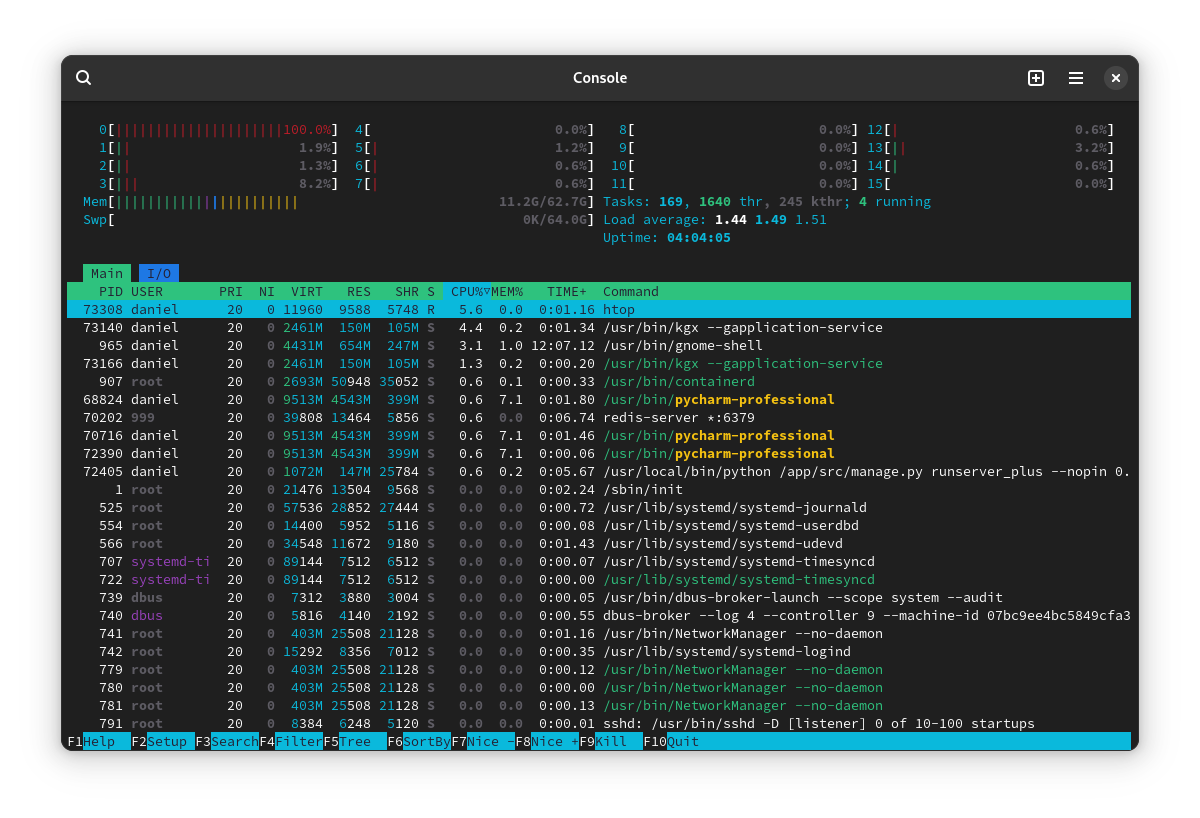

They're fanless and low-power, which was the primary draw of going this route. I run a Kubernetes cluster on them, including a few personal websites (Nginx+Python+Django), PostgreSQL, Sonarr, Calibre, SSH (occasionally) and every once in a while, an OpenArena server :-)

Seven Raspberry Pi 4s and one Pi Zero, mounted on some tile "shelves" inside some IKEA furniture.

Monolith has the same problem here. I think the best resolution might be some sort of browser-plugin based solution where you could say "archive this" and have it push the result somewhere.

I wonder if I could combine a dumb plugin with Monolith to do that... A weekend project perhaps.

Monolith can be particularly handy for this. I used it in a recent project to archive the outgoing links from my own site. Coincidentally, if anyone is interested in that, it's called django-cool-urls.
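For anyone curious what "archive the outgoing links" can look like in practice, here's a rough Python sketch. It is not how django-cool-urls does it; it just shells out to the `monolith` CLI using its `-o` output flag. The function names, the hashed filename scheme, and the `archive` directory are all made up for illustration, and it assumes `monolith` is installed and on your PATH.

```python
import hashlib
import subprocess
from pathlib import Path


def archive_path(url: str, archive_dir: str) -> Path:
    """Pick a stable filename for a URL (hypothetical naming scheme)."""
    digest = hashlib.sha256(url.encode("utf-8")).hexdigest()[:16]
    return Path(archive_dir) / f"{digest}.html"


def monolith_command(url: str, output: Path) -> list[str]:
    """Build the CLI call: monolith <url> -o <file>."""
    return ["monolith", url, "-o", str(output)]


def archive(url: str, archive_dir: str = "archive") -> Path:
    """Save a single-file copy of the page and return where it landed."""
    output = archive_path(url, archive_dir)
    output.parent.mkdir(parents=True, exist_ok=True)
    # Assumes the monolith binary is on PATH; raises if it exits non-zero.
    subprocess.run(monolith_command(url, output), check=True, timeout=120)
    return output
```

Hashing the URL means the same link always maps to the same file, so re-running the archive step overwrites the old copy instead of piling up duplicates.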

exFAT is good for portable devices, but if you're working with something internally, there's no reason not to use EXT4 or NTFS.

That's not been my experience. Lots of drives I've bought have been FAT32 out of the box.

- Keep everything in an external git service. You can use third party services like Codeberg, GitLab, or GitHub, or host your own on your NAS.

- When you're not working on a project and don't think you'll need to reference it for a while, just delete it from your laptop. The code always lives in git anyway.

In terms of local storage, I usually have everything in ~/projects/project-name, and I don't run into tiny file size limits because I don't use FAT32 filesystems. FAT32 is the default filesystem you usually get on USB sticks and external hard drives you buy. You have to format those drives to something like EXT4 (Linux) or NTFS (Windows), or you get stuck with FAT32 and its 4GB maximum file size.
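If you want to check whether a project would even survive a copy onto a FAT32 drive, something like this Python sketch would do it. FAT32 records file sizes in a 32-bit field, so the ceiling is 4 GiB minus one byte; the function names here are just ones I picked for the example.

```python
from pathlib import Path

# FAT32 stores each file's size in 32 bits, so the largest file is 2**32 - 1 bytes.
FAT32_MAX_FILE_BYTES = 2**32 - 1


def fits_on_fat32(size_bytes: int) -> bool:
    """True if a file of this size can be stored on a FAT32 filesystem."""
    return 0 <= size_bytes <= FAT32_MAX_FILE_BYTES


def oversized_files(root: str) -> list[Path]:
    """List the files under root that are too big to copy to a FAT32 drive."""
    return [
        path
        for path in Path(root).rglob("*")
        if path.is_file() and not fits_on_fat32(path.stat().st_size)
    ]
```

Running `oversized_files("~/projects/project-name")` won't expand the `~` for you, so pass an absolute path or wrap it in `os.path.expanduser` first.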

You probably want to look into Health Checks. I believe you can tell Docker to "start service B when service A is healthy", so you can define your health check with a script that depends on Tailscale functioning.
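In Docker Compose, the "start B when A is healthy" part is `depends_on` with `condition: service_healthy`. The health check script itself could be as small as the Python sketch below. It assumes the `tailscale` CLI is available wherever the check runs, and that `tailscale status --json` reports a `BackendState` of `"Running"` when the node is connected (worth confirming against your version). The function names are mine.

```python
import json
import subprocess


def tailscale_is_up(status_json: str) -> bool:
    """True if the JSON from `tailscale status --json` says the backend is running."""
    try:
        status = json.loads(status_json)
    except json.JSONDecodeError:
        return False
    # Assumption: a connected node reports BackendState == "Running".
    return isinstance(status, dict) and status.get("BackendState") == "Running"


def main() -> int:
    """Return 0 (healthy) or 1 (unhealthy), the exit codes Docker expects."""
    try:
        result = subprocess.run(
            ["tailscale", "status", "--json"],
            capture_output=True,
            text=True,
            timeout=5,
        )
    except (OSError, subprocess.TimeoutExpired):
        return 1  # CLI missing or daemon not answering: treat as unhealthy
    return 0 if tailscale_is_up(result.stdout) else 1

# To use this as the health check command, end the script with: sys.exit(main())
```

Keeping the parsing in its own function means you can test the logic without Tailscale installed, and swap in a stricter check (a specific peer being online, say) later.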

So my first impression is that the requirement to copy-paste that elaborate SQL to get the schema is clever but not sufficiently intuitive. Rather than saying "Run this query and paste the output", you say "Run this script in your database" and print out a bunch of text that is not a query at all but a one-liner Bash script that relies on the existence of pbcopy, something that (a) doesn't exist on many default installs, (b) is a red flag for something that's meant to be self-hosted (why am I talking to a pasteboard?), and (c) is totally unnecessary anyway.

Instead, you could just say: "Run this query and paste the result in this box" and print out the raw SQL only. Leave it up to the user to figure out how they want to run it.

Alternatively, you could do something like: "Run this on your machine and copy/paste the output":

$ curl 'https://app.chartdb.io/superquery.sql' | psql --username USERNAME --host HOSTNAME DBNAME

In the case of the cloud service, it's also not clear if the data is being stored on the server or client side in LocalStorage. I would think that the latter would be preferable.

I had no idea! Thanks for the tip.

Each Pi 4 has 8GB of RAM. With six devices, that's 48GB to play with. More than enough for my needs.