this post was submitted on 10 Dec 2024

740 points (97.8% liked)



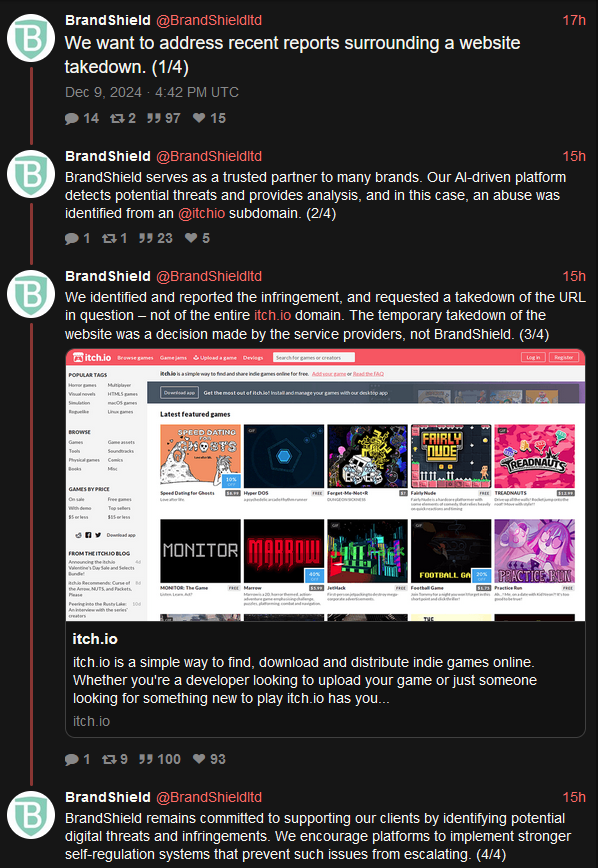

You can't create an automated machine, let it run loose without supervision and then claim to not be responsible for what the machine does.

Maybe, just maybe, this was the very first instance of their AI malfunctioning (which I don't believe for a second), in which case the correct response from Brandshield would have been to announce that they would temporarily suspend the activities of this particular program and promise to implement improvements so that it would not happen again. Brandshield has done neither of these, which tells me that it's not the first time and also that Brandshield has no intention of preventing it from happening again in the future.

I'm not trying to exonerate them of any blame; I'm just saying "knowingly" implies a human looking at something and making a decision, as opposed to a machine making a mistake.

I made an automaton. I set the parameters in such a way that there is a large variability of actions that my automaton can take. My parameters do not prevent my automaton from taking certain illegal actions. I set my automaton loose. After some time it turns out that my automaton has taken an illegal action against a specific person. Did I know that my automaton was going to commit an illegal action against that specific person? No, I did not. Did I know that my automaton was sooner or later going to commit certain illegal actions? Yes, I did, because those actions are within the parameters of the automaton. I know my automaton is capable of taking illegal actions, and given enough instances there is an absolute certainty that it will take them. I do not need to interact with my automaton in any way to know that some of its actions will be illegal.

And I'm not saying that you are. I tried to show with a parable that they do not need to see their machine's actions to know that some of its actions are illegal. That's what we were disagreeing on: whether they know.