Useful in the way that it increases emissions and hopefully leads to our demise because that's what we deserve for this stupid technology.

Surely this is better than the crypto/NFT tech fad. At least there is some output from the generative AI that could be beneficial to the whole of humankind rather than lining a few people's pockets?

Unfortunately crypto is still somehow a thing. There is a couple-year-old bitcoin mining facility in my small town that brags about consuming 400MW of power to operate, and it is solely owned by a Chinese company.
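For a sense of scale, here is a rough back-of-the-envelope sketch of what 400MW works out to over a year. It assumes the facility really draws its full 400MW around the clock, which is an assumption and not something stated above; `annual_energy_twh` and `load_factor` are just illustrative names.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_energy_twh(power_mw, load_factor=1.0):
    """Energy used in one year, in TWh, for a constant draw of power_mw megawatts.

    load_factor is the assumed fraction of time spent at full draw
    (1.0 means running flat out all year).
    """
    energy_mwh = power_mw * HOURS_PER_YEAR * load_factor
    return energy_mwh / 1_000_000  # 1 TWh = 1,000,000 MWh


print(annual_energy_twh(400))  # 3.504
```

So roughly 3.5 TWh a year if it never throttles down; a lower load factor scales that figure proportionally.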

I recently noticed a number of bitcoin ATMs that have cropped up where I live - mostly at gas stations and the like. I am a little concerned by it.

While the consumption for AI training can be large, there are arguments to be made for its net effect in the long run.

The article's last section gives a few examples that are interesting to me from an environmental perspective. Using smaller problem-specific models can have a large effect in reducing AI emissions, since their relation to model size is not linear. AI assistance can indeed increase worker productivity, which does not necessarily decrease emissions but we have to keep in mind that our bodies are pretty inefficient meat bags. Last but not least, AI literacy can lead to better legislation and regulation.

The argument that our bodies are inefficient meat bags doesn't make sense. AI isn't replacing the inefficient meat bag, unless I'm unaware of an AI killing people off, and so far I've yet to see AI make any meaningful dent in overall emissions or research. A ChatGPT query can use 10x more power than a regular Google search and there is no chance the result is 10x more useful. AI feels more like it's adding to the enshittification of the internet and, because of its energy use, the enshittification of our planet. IMO if these companies can't afford to build renewables to support their use then they can fuck off.

Theoretically we could slow down training and coast on fine-tuning existing models. Once the AIs are trained, they don't take that much energy to run.

Everyone was racing towards "bigger is better" because it worked up to GPT4, but word on the street is that raw training is giving diminishing returns so the massive spending on compute is just a waste now.

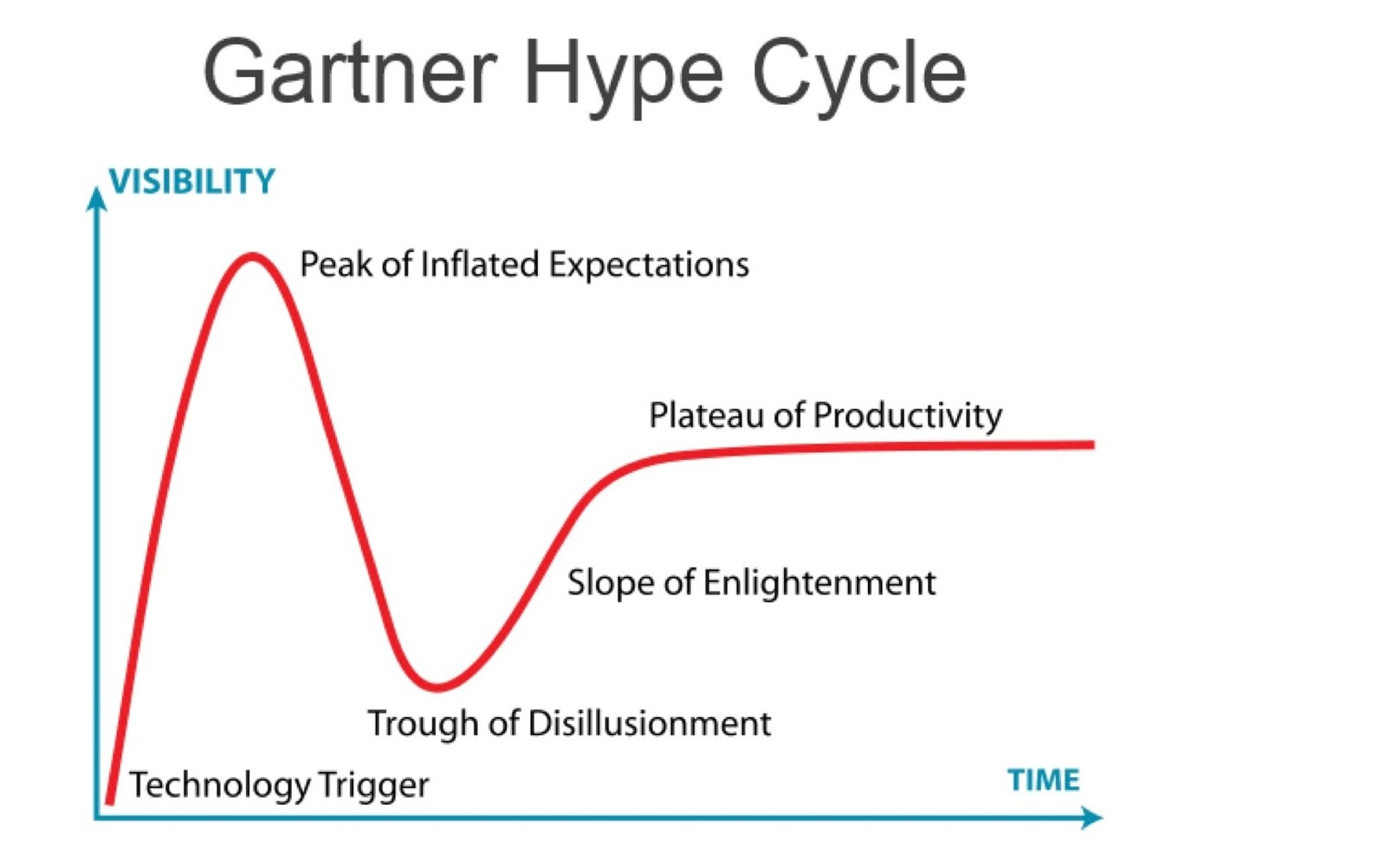

Is it me or is there something very facile and dull about Gartner charts? Thinking especially about the “””magic””” quadrants one (wow, you ranked competitors in some area along TWO axes!), but even this chart feels like such a mundane observation that it seems like frankly undeserved advertising for Gartner, again, given how little it actually says.

And it isn't even true in many cases. For example, the internet with the dotcom bubble. It actually became much bigger and more important than anyone anticipated in the 90s.

The graph for VR would also be quite interesting, given how many hype cycles it has had over the decades.

The trough of disillusionment sounds like my former depression. The slope of enlightenment sounds like a really fun water slide.

LLMs need to get better at saying "I don't know." I would rather an LLM admit that it doesn't know the answer instead of making up a bunch of bullshit and trying to convince me that it knows what it's talking about.

LLMs don't "know" anything. The true things they say are just as much bullshit as the falsehoods.

I work on LLMs for a big tech company. The misinformation on Lemmy is at best slightly disingenuous, and at worst people parroting falsehoods without knowing the facts. For that reason, take everything (even what I say) with a huge pinch of salt.

LLMs do NOT just parrot back falsehoods, otherwise the "best" model would just be the "best" data in the best fit. The best way to think about an LLM is as a huge conductor of data AND guiding expert services. The content is derived from training data, but it will also hit hundreds of different services to get context, find real-time info, disambiguate, etc. A huge part of LLM work is getting your models to basically say "this feels right, but I need to find out more to be correct".

With that said, I think you're 100% right. Sadly, and I think I can speak for many companies here, knowing that you're right is hard to get right, and LLMs are probably right a lot in instances where the confidence in an answer is low. I would rather an LLM say "I can't verify this, but here is my best guess" or "here's a possible answer, let me go away and check".

I thought the tuning procedures, such as RLHF, kind of mess up the probabilities, so you can't really tell how confident the model is in the output (and I'm not sure how accurate these probabilities were in the first place)?

Also, it seems, at a certain point, the more context the models are given, the less accurate the output. A few times, I asked ChatGPT something, and it used its browsing functionality to look it up, and it was still wrong even though the sources were correct. But, when I disabled "browsing" so it would just use its internal model, it was correct.

It doesn't seem there are too many expert services tied to ChatGPT (I'm just using this as an example, because that's the one I use). There's obviously some kind of guardrail system for "safety," there's a search/browsing system (it shows you when it uses this), and there's a Python interpreter. Of course, OpenAI is now very closed, so they may be hiding that it's using expert services (beyond the "experts" in the MoE model they're speculated to be using).
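For what it's worth, the "say so when confidence is low" idea from the comments above can be sketched as a toy. This is only an illustration and not how any real product works: it assumes you already have per-token log probabilities for an answer, and `answer_or_abstain`, `token_logprobs`, and the `threshold` value are all made-up names and numbers. As noted above, tuning such as RLHF may leave these probabilities poorly calibrated, so a cutoff like this is a crude heuristic at best.

```python
import math


def answer_or_abstain(answer, token_logprobs, threshold=0.75):
    """Return the answer as-is, or a hedged version when confidence is low.

    token_logprobs: hypothetical per-token natural-log probabilities.
    threshold: arbitrary illustrative cutoff on the average token probability.
    """
    if not token_logprobs:
        return "I don't know."
    # Geometric mean of the token probabilities = exp(mean log probability).
    confidence = math.exp(sum(token_logprobs) / len(token_logprobs))
    if confidence >= threshold:
        return answer
    return f"I can't verify this, but here is my best guess: {answer}"


print(answer_or_abstain("Paris", [-0.01, -0.02]))  # high confidence
print(answer_or_abstain("Paris", [-1.0, -2.0]))    # low confidence, hedged
```

The first call returns the bare answer, and the second comes back prefixed with the "best guess" wording.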

I hate to break this to everyone who thinks that “AI” (LLM) is some sort of actual approximation of intelligence, but in reality, it’s just a fucking fancy ass parrot.

Our current “AI” doesn’t understand anything or have context, it’s just really good at guessing how to say what we want it to say… essentially in the same way that a parrot says “Polly wanna cracker.”

A parrot “talking to you” doesn’t know that Polly refers to itself or that a cracker is a specific type of food you are describing to it. If you were to ask it, “which hand was holding the cracker…?” it wouldn’t be able to answer the question… because it doesn’t fucking know what a hand is… or even the concept of playing a game or what a “question” even is.

It just knows that if it makes its mouth go “blah blah blah” in a very specific way, a human is more likely to give it a tasty treat… so it mushes its mouth parts around until its squawk becomes a sound that will elicit such a reward from the human in front of it… which is similar to how LLM “training models” work.

Oversimplification, but that’s basically it… a trillion-dollar power-grid-straining parrot.

And just like a parrot - the concept of “I don’t know” isn’t a thing it comprehends… because it’s a dumb fucking parrot.

The only thing the tech is good at… is mimicking.

It can “trace the lines” of any existing artist in history, and even blend their works, which is indeed how artists learn initially… but an LLM has nothing that can “inspire” it to create the art… because it’s just tracing the lines like a child would their favorite comic book character. That’s not art. It’s mimicry.

It can be used to transform your own voice to make you sound like most celebrities almost perfectly… it can make the mouth noises, but has no idea what it’s actually saying… like the parrot.

You get it?

To be fair, it is useful in some regards.

I'm not a huge fan of Amazon, but last time I had an issue with a parcel it was sorted out insanely fast by the AI assistant on the website.

Within literally 2 minutes I'd had a refund confirmed. No waiting for people to eventually pick up the phone after 40 minutes. No misunderstanding or annoying questions. The moment I pressed send on my message it instantly started formulating a reply.

The truncated version went:

"Hey I meant to get [x] delivery, but it hasn't arrived. Can I get a refund?"

"Sure, your money will go back into [y] account in a few days. If the parcel turns up in the meantime, you can send it back by dropping it off at [z]"

Done. Absolutely painless.

How is a chatbot here better, faster, or more accurate than just a "return this" button on a web page? Chat bots like that take 10x the programming effort and actively make the user experience worse.

Presumably there could be nuance to the situation that the chat bot is able to convey?

But that nuance is probably limited to a paragraph or two of text. There's nothing the chatbot knows about the returns process at a specific company that isn't contained in that paragraph. The question is just whether that paragraph is shown directly to the user, or if it's filtered through an LLM first. The only thing I can think of is that chatbot might be able to rephrase things for confused users and help stop users from ignoring the instructions and going straight to human support.

And it could hallucinate, so you would need to add further validation after the fact

That has nothing to do with AI and is strictly a return policy matter. You can get a return in less than 2 minutes by speaking to a human at Home Depot.

Businesses choose to either prioritize customer experience, or not.

Useful for scammers and spam

We should've known this when we still have those voice-prompt phone operators that still can't, for the life of them, understand some of the shit we tell them. That's the direction I saw this whole AI thing going, and I had a hunch that it was going to plummet because the big new shiny tech isn't all that it was cracked up to be.

To call it 'ending' though is a stretch. No, it'll be improved in time and it'll come back when it's more efficient. We're only seeing the fundamental failures of expectancy vs reality in the current state. It's too early to truly call it.

It's on the falling edge of the hype curve. It's quite expected, and you're right about where it's headed. It can't do everything people want/expect but it can do some things really well. It'll find its niche and people will continue to refine it and find new uses, but it'll never be the threat/boon folks have been expecting.

People are using it for things it's not good at thinking it'll get better. And it has to an extent. It is technically very capable of writing prose or drawing pictures, but it lacks any semblance of artistry and it always will. I've seen trained elephants paint pictures, but they are interesting for the novelty, not for their expression. AI could be the impetus for more people to notice art and what makes good art special.

hot take: chatbots are actually kinda useful for problem solving but they're not the best at it

We should be using AI to pump the web with nonsense content that later AI will be trained on as an act of sabotage. I understand this is happening organically; that's great and will make it impossible to just filter out AI content and still get the amount of data they need.

That sounds like dumping trash in the oceans so ships can't get through the trash islands easily anymore and become unable to transport more trashy goods. Kinda missing the forest for the trees here.

My shitposting will make AI dumber all on its own; feedback loop not required.

Alternatively, and possibly almost as useful, companies will end up training their AI to detect AI content so that they don't train on AI content. Which in turn would give everyone a tool to filter out AI content. Personally, I really like the apps that poison images when they're uploaded to the internet.

Bold of you to assume companies will release their AI detection tools

So if I were to get this straight, the entire logic is that due to big hype, it fits the pattern of other techs becoming useful… that’s sooo not a guarantee, so much big-hype stuff has died.