It's not useless; it's saying you can't afford the better-quality product, you dirty, dirty poor.


And boy, isn't that true

That’s a good summary. Google Gemini is no better. Type in a question and it starts off great, but then it drifts into other brands, or into steps for doing something unrelated to what you asked. It’s just terrible, and someone will sue them over it next year. Just wait.

Am I right in guessing that the XTX uses 50% more energy for 20% more performance?
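Taking those figures at face value (they are the commenter's guess, not benchmark data), the efficiency comparison is a ratio of ratios: performance per watt scales by 1.20 / 1.50. A minimal sketch; the function name `relative_efficiency` is hypothetical:

```python
def relative_efficiency(perf_gain: float, power_gain: float) -> float:
    """Return performance-per-watt of card B relative to card A.

    perf_gain and power_gain are fractional increases of B over A,
    e.g. 0.20 for "20% more performance" and 0.50 for "50% more power draw".
    """
    return (1 + perf_gain) / (1 + power_gain)


# Figures from the guess above, not measured numbers.
ratio = relative_efficiency(perf_gain=0.20, power_gain=0.50)
print(f"XTX perf/watt relative to XT: {ratio:.2f}")  # prints 0.80
```

So under that guess, the XTX would do roughly 20% less work per watt than the XT.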

You can do this with practically any versus question and get hilarious results.

This doesn't appear to be comparing them, though? Just explaining what two acronyms are?

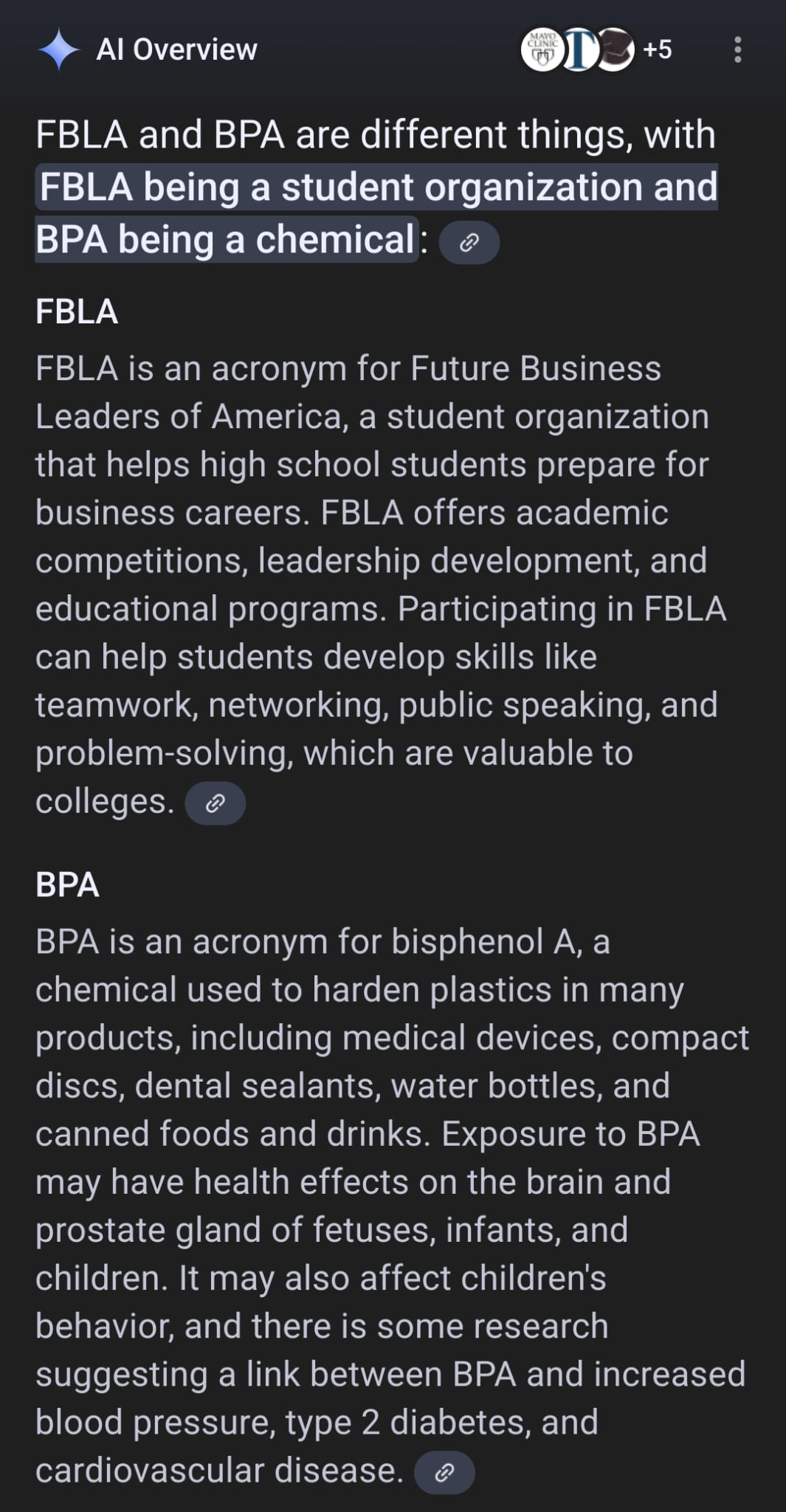

Yeah, I should have mentioned that the context is FBLA (Future Business Leaders of America), and Google has partially fixed the results for this prompt.

Original from a few weeks ago:

BPA is another student organization, Business Professionals of America.

The AI ignores the subject context and just compares the most common meanings of the acronyms.

They lazily patched it by having the model run a subject check on the result but not on the prompt, so it still comes back with the chemical (bisphenol A), lol.
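As a toy illustration of the difference between picking the most common expansion and using the prompt's subject to disambiguate, here is a minimal sketch. It is not how Google's system works; the sense table `SENSES` and all helper names are hypothetical, and the "subject" is guessed crudely as the one most acronyms in the prompt share.

```python
from collections import Counter
from typing import List, Optional

# Hypothetical sense inventory: acronym -> (subject, expansion) pairs,
# ordered from most to least common. Not real data from any search engine.
SENSES = {
    "BPA": [("chemistry", "bisphenol A"),
            ("student organizations", "Business Professionals of America")],
    "FBLA": [("student organizations", "Future Business Leaders of America")],
}


def expand_most_common(acronym: str) -> str:
    """Context-free: always pick the most common sense (the reported failure)."""
    return SENSES[acronym][0][1]


def infer_subject(acronyms: List[str]) -> Optional[str]:
    """Guess the prompt's subject as the one shared by the most acronym senses."""
    counts = Counter(subj for a in acronyms for subj, _ in SENSES.get(a, []))
    return counts.most_common(1)[0][0] if counts else None


def expand_in_context(acronym: str, subject: Optional[str]) -> str:
    """Prefer a sense matching the prompt's subject; fall back to the most common."""
    for subj, expansion in SENSES[acronym]:
        if subj == subject:
            return expansion
    return expand_most_common(acronym)


prompt_acronyms = ["FBLA", "BPA"]  # e.g. a prompt like "FBLA vs BPA"
subject = infer_subject(prompt_acronyms)
print([expand_most_common(a) for a in prompt_acronyms])
print([expand_in_context(a, subject) for a in prompt_acronyms])
```

The first line pairs FBLA with the chemical; the second keeps both expansions in the student-organization subject because the check is applied to the prompt, not just to the answer.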

First of all, yes

Second option is no

When presented with yes/no, pick no. No is the clear yes.

Garbage in, something something

Hello, fellow humans. I too am human, just like you! I have skin, and blood, and guts inside me, which is not at all disgusting. Just another day of human!

Won't you share a delicious cup of ~~motor oil~~ lemonade with me? It's nice and refrigerated, so it will cool down our bodies without the use of cooling fans!

However, we too can use cooling fans. They will simply be placed on the ceiling, or in a box, or free-standing and oscillating. Not at all inside our bodies, connected to a board controlled by our CPUs that we clearly don't have!

Now come, let us take our colored paper with numbers and pictures of previous human rulers and exchange it for human food prepared by not-fully-adult humans whose brains have not yet developed the ability to care about food sanitation. Then we shall complain that our meal cost too many paper dollars while receiving fewer and fewer potato stick products every year, completely ignoring the risk of heart disease as we indulge in whatever amounts of food we desire to acquire.

Finally, we shall retreat to our place of residence and complain on the internet that our elected leaders are performing poorly. Rather than ~~terminate the program~~ vote the poorly performing humans out, we shall instead complain that it is other humans' fault for voting them in. We shall make no attempt to change our system, which has been broken for our entire existence with no signs of improving. Instead, every 4 years we will make an effort to write down the names of people we've already complained about, in the hope that enough people write down the same names and that this will fix the problem.

Oh. Shall I order more fans and cooling units from amazon.com? The news reports that temperatures will soon reach 130°F on a regular basis, and all humans will slowly perish.

Shall I share photographs of the new CEO of Starbucks, whose daily commute involves a personal jet aircraft, which surely isn't compounding the problem at all?

Jokes aside (and this whole AI search results thing is a joke), this seems like an artifact of sampling and tokenization.

I wouldn't be surprised if Gemini's tokenizer splits XTX into "XT" and "X" or something like that, so there's a real chance of mixing them up once it has written out XT. Add in sampling (literally randomizing the token choice a little), and I'm surprised it gets any of it right.
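A minimal sketch of both effects, under loudly stated assumptions: the vocabulary and logits are made up, and greedy longest-match tokenization is a simplification (real LLM tokenizers typically use BPE or similar; Gemini's actual vocabulary is not known here). If "XTX" is not a single token, the model has to choose between continuing with "X" or moving on after "XT", and temperature sampling makes that a weighted coin flip rather than a certainty.

```python
import math
import random

# Hypothetical toy vocabulary: "XTX" is deliberately NOT a single token.
VOCAB = {"XT", "X", "T", " "}


def tokenize(text, vocab):
    """Greedy longest-match tokenization (a simplification of real tokenizers)."""
    tokens, i = [], 0
    max_len = max(len(t) for t in vocab)
    while i < len(text):
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            raise ValueError(f"cannot tokenize {text[i:]!r}")
    return tokens


def softmax(logits, temperature):
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(candidates, logits, temperature, rng):
    """Draw one next token according to the temperature-scaled probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


print(tokenize("XTX", VOCAB))  # prints ['XT', 'X']

# After emitting "XT": continue with "X" (-> XTX) or a space (-> plain XT).
candidates, logits = ["X", " "], [2.0, 1.5]  # made-up logits
rng = random.Random(0)
for temp in (1.0, 0.1):
    draws = [sample_next(candidates, logits, temp, rng) for _ in range(1000)]
    print(f"T={temp}: wrote XT instead of XTX {draws.count(' ')} / 1000 times")
```

With these made-up logits, roughly 38% of draws at temperature 1.0 end the name at "XT", while at 0.1 the higher-scoring "X" wins almost every time; lowering the temperature reduces the mix-ups but cannot fix a model that scores the wrong token higher.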

I wonder whether they could feed the output back into GPT so it realizes the output makes no sense?