I run an old desktop mainboard as my homelab server. It runs Ubuntu smoothly at loads between 0.2 and 3 (whatever unit that is).

Problem:

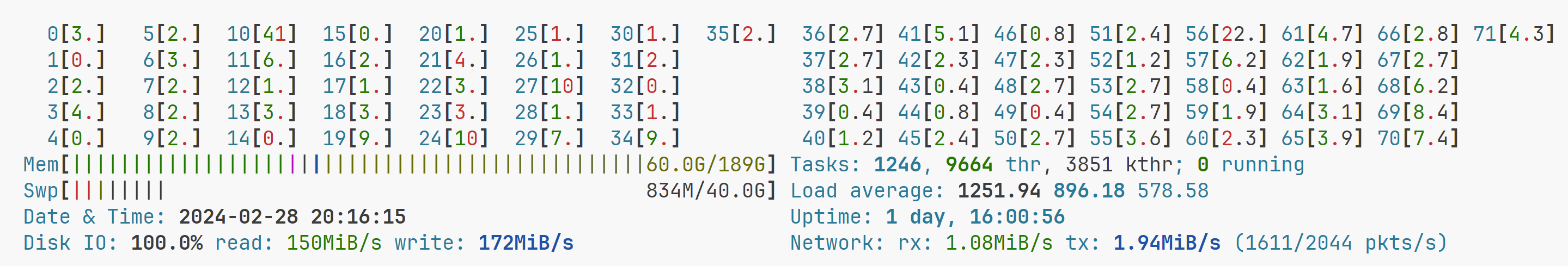

Occasionally, the CPU load skyrockets above 400 (yes really), making the machine totally unresponsive. The only solution is the reset button.

Solution:

- I haven't found what the cause might be, but I think that a reboot every few days would prevent it from ever happening. That could be done easily with a crontab line.

- alternatively, I would like to have some dead-simple script running in the background that simply looks at the CPU load and executes a reboot when the load climbs over a given threshold.

--> How could such a cpu-load-triggered reboot be implemented?

edit: I asked ChatGPT to help me create a script that is started by crontab every X minutes. The script has a kill-threshold that does a kill-9 on the top process, and a higher reboot-threshold that ... reboots the machine. before doing either, or none of these, it will write a log line. I hope this will keep my system running, and I will review the log file to see how it fares. Or, it might inexplicable break my system. Fun!