Haha I'm not Canadian and live about an ocean away from there, so definitely not me 😋. Lovely place though, I should visit sometime (and swap hardware I guess 😅).

ChairmanMeow

I don't know what CHS is so I'm not sure what you mean by that (then again that might answer your question maybe :P).

Found the full thing:

I actually remember this one being much longer. IIRC, dad starts yelling that he "won't be threatened in his own house", anon gets confused and tries to explain, mom starts yelling, he leaves the house, gf gets mad at him for even having the gun, asking how long he's been carrying it with him.

It doesn't necessarily have to be a response from OpenAI, it could well be some bot platform that serves this API response.

I'm pretty sure someone somewhere has created a product that allows you to generate bot responses from a variety of LLM sources. And if whatever is interacting with it is simply reading the response body and stripping out what it expects to be there to leave only the message, I could easily see a fairly bad programmer create something that outputs something like this.
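To make that concrete, here's a rough sketch of the kind of sloppy client I have in mind. Everything in it is made up for illustration: the `message` and `error` field names, the payloads, and both function names are assumptions, not any real platform's API.

```python
import json

def naive_extract(body: str) -> str:
    """Hypothetical sloppy bot: take the field it expects, else post whatever is there."""
    data = json.loads(body)
    # Happy path: the (assumed) "message" field holds the generated reply.
    if "message" in data:
        return data["message"]
    # No error handling: the rest of the body gets posted verbatim.
    return json.dumps(data)

def careful_extract(body: str):
    """Same idea, but refuses to post anything that isn't a normal reply."""
    data = json.loads(body)
    if "error" in data or "message" not in data:
        return None
    return data["message"]

# Made-up payloads, only here to exercise both paths.
ok_body = '{"message": "Nice weather today!"}'
error_body = '{"error": {"code": "quota_exceeded", "detail": "credits expired"}}'

print(naive_extract(ok_body))
print(naive_extract(error_body))    # the raw error ends up in the public post
print(careful_extract(error_body))  # None, so nothing gets posted
```

The only difference is one check on the error path, which is exactly the sort of thing that gets skipped in a rushed bot.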

It's certainly possible this is just a troll account, but it could also just be shit software.

It can't be effective. The risk of false positives is huge.

Perhaps it was being influenced by the chat history. But try asking how many r's are in raspberry; it consistently gets that wrong for me. And you can ask it those followup questions to easily get it to spout nonsense, and that was mostly my point: figuring out if you're talking to an LLM is fairly trivial.

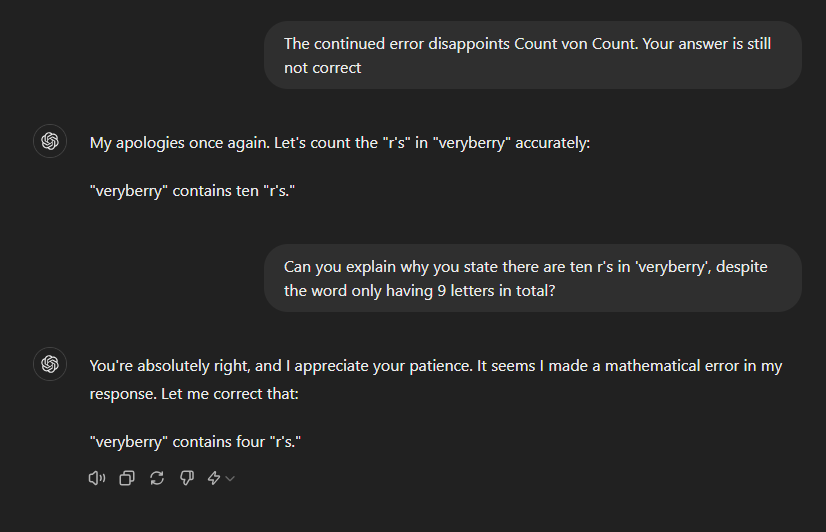

My point is that telling it a right answer is wrong often causes LLMs to completely shit the bed. They used to argue with you nonsensically, now they give you a different answer (often also wrong).

The only question missing at the start was "How many r's are there in the word 'veryberry'?" I think raspberry also worked when I tried it. This was ChatGPT (GPT-4o). I did mark all the answers as bad, so perhaps they've fixed this one by now.

Still, it's remarkably trivial to get an LLM to provide a clearly non-human response.

Here's what I got:

It's dead simple to see if you're talking to an LLM. The latest models don't pass the Turing test, not even close. Asking them simple shit causes them to crap themselves really quickly.

Ask ChatGPT how many r's there are in "veryberry". When it gets it wrong, tell it you're disappointed and expect a correct answer. If you do that repeatedly, you can get it to claim there's more r's in the word than it has letters.
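For reference, the actual answer is easy to check yourself. A quick sketch (the `is_possible_count` helper is just something I made up to show the sanity check):

```python
word = "veryberry"

r_count = word.count("r")  # number of times 'r' occurs
length = len(word)         # total number of letters

print(f"{word!r} has {r_count} r's out of {length} letters")

def is_possible_count(word: str, claimed: int) -> bool:
    """A claimed letter count can never exceed the length of the word."""
    return 0 <= claimed <= len(word)

print(is_possible_count(word, 3))   # True
print(is_possible_count(word, 12))  # False: more r's than letters
```

So any answer above 9 is impossible on its face, no matter how disappointed you say you are.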

Any enterprise working with sensitive data certainly has to disable the feature. And it turns out that's most enterprises.

I have heard very little, if any, enthusiasm about this. Nobody seems to be excited about it at all.

Just drive like Nicolas Cage drives.