How's the density compared to LFP?

avidamoeba

LFP is not new. It's been in cars since Fisker integrated A123's batteries. CATL and other manufacturers have been churning out LFP in volume for over a decade now.

What makes you think VC won't have another product in the pipeline to take in most of the users exiting BlueSky? Just like they had BS ready to scoop up most of the Xodus.

Not a critique really, the Fediverse will still be there and will get some people every time.

The .world instance is very well funded - they take in more money than they spend. It's not profit, since they're a nonprofit; they save it and also give to others. Meanwhile the Lemmy developers still don't have proper full-time funding. This information is public. If I were you I'd subscribe to fund the developers for now. Lemmy.ca is also well funded.

How many does Threads have?

What I took from this post is that every living room / home theater setup needs a server rack instead of a HiFi rack. Doesn't matter what you throw in it, it looks badass.

Thank you!

Imagine I'm an idiot, do you have a link or description for where to find those? I looked for them some time ago and found nothing. 🥹

Where do you get installation media for Windows LTSC?

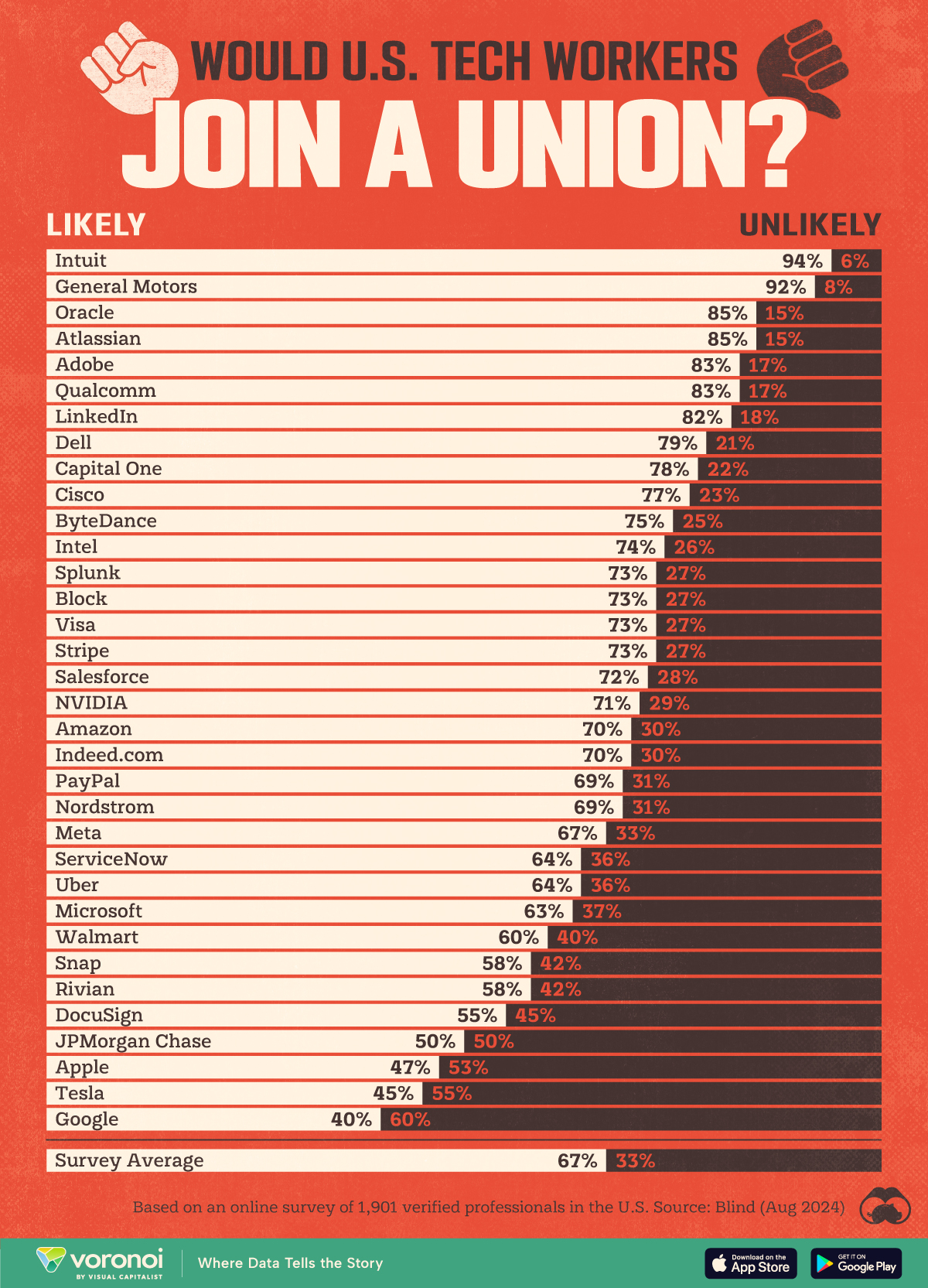

This is beautiful! It's like a textbook example for everyone paying attention to draw crisp conclusions about how the system works.

Yes, I do, and LFP has been manufactured and integrated at scale for a very long time.