The Speznasz.

Little pig boy comes from the dirt.

State Senator adjusts bifocals

"What the hell is a poop knife?"

I understand the irony. But can we not pretend they blindly used the output or even generated a full page? It was one specific section, meant to provide a technical definition of “what is a deepfake”.

“I was really struggling with the technical aspects of how to define what a deepfake was. So I thought to myself, ‘Well, why not ask the subject matter expert (I do not agree with that wording, lol), ChatGPT?’” Kolodin said.

The legislator from Maricopa County said he “uploaded the draft of the bill that I was working on and said, you know, please, please put a subparagraph in with that definition, and it spit out a subparagraph of that definition.”

“There’s also a robust process in the Legislature,” Kolodin continued. “If ChatGPT had effed up some of the language or did something that would have been harmful, I would have spotted it, one of the 10 stakeholder groups that worked on or looked at this bill, the ACLU would have spotted, the broadcasters association would have spotted it, it would have got brought out in committee testimony.”

But Kolodin said that portion of the bill fared better than other parts that were written by humans. “In fact, the portion of the bill that ChatGPT wrote was probably one of the least amended portions,” he said.

I do not agree with his statement that any mistakes made by AI could also be made by humans. In my experience the reasoning, and the errors in reasoning, are quite different, but the way ChatGPT was used is absolutely fair.

No kidding. When I read that, my first thought was, "He's clearly at least above the median intelligence of his fellow Arizona GOP reps, if not in the top 10% of their entire conference."

Anyone who read the article AND has experience with the Arizona GOP probably thought the same thing.

The Arizona GOP collects some of the dumbest people alive.

I get the feeling this will generally be the peak of generative AI: used for assistance when needed, and with lots of oversight. The problem is that not all people bother to check the AI's work.

That’s the point, literally. These tools don’t all of a sudden make some idiot a genius. They're for already competent experts to expedite their work. They are the oversight.

These types of things are exactly what Generative AI models are good for, as much as Internet people don’t want to hear it.

Things that are massively repeatable based on previous versions (like legislation, contracts, etc.) are pretty much perfect for it. These are just tools for already competent people. So in theory you have GenAI crank out the boring stuff and have an expert “fill in the blanks”, so to speak.

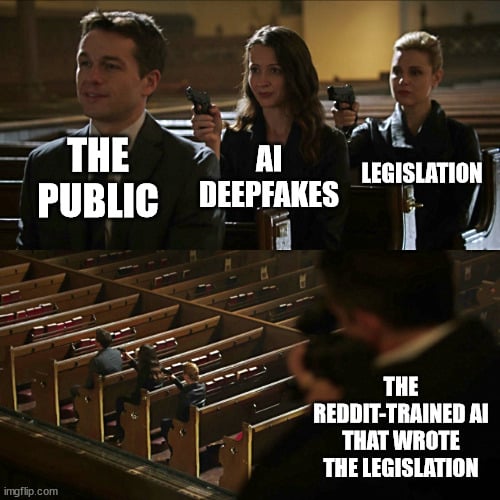

True, if the LLM is trained on those legal documents. Less true if it's trained on whatever random garbage was scraped from Reddit.

At least this time the Rep. was actually reviewing the output, so that's responsible.

The stupid. It hurts.

Yeah, side hurts. 🤣

And yet again it cynically amuses me that AI has become "artificial" intelligence in the sense of "fake."

It's a shabby substitute for real intelligence, used by people who don't possess any of their own to impress other people who don't possess any of their own.

That's a use. But not their only use.

This is actually true.

Most notably to me, the ability to sift through and collate enormous amounts of data has led to surprising things like diagnosing diabetes through retinal scans.

But those sorts of things, beneficial and impressive though they might be, remain at the fringe of AI research for the simple reason that those sorts of uses are too niche to provide the revenue stream that all of the bubble-building corporate parasites demand. Their focus is on the AI-as-a-substitute-for-real-intelligence aspect (and increasingly "AI" as just a meaningless marketing buzzword), since that's where the money is. And unfortunately but not coincidentally, that's where most of the public attention is too.

He argues that any shortcomings associated with using ChatGPT to write part of a law would also be present if humans take the reins. Kolodin said he didn’t see any pitfalls “that I don’t also see with relying on legislative attorneys to draft up legislation.”

Last I checked humans carried 100% of the liability.

Someone should run all lawyer books through ChatGPT so we can have a free open-source lawyer in our phones.

During a traffic stop: "Hold on officer, I gotta ask my lawyer. It says to shut the hell up."

Cop still shoots him in the head so he can learn his lesson. He pulled out his phone!

Or lawyer-bot cites some sovereign citizen crap as if it were established legal precedent. "You can't prosecute me in this court! Your flag has a gold fringe on it!"

🙊 and the group think nonsense continues...

Y'all know those grammar checking thingies? Yeah, same basic thing. You know when you're stuck writing something and your wording isn't quite what you'd like? Maybe you ask another person for ideas; same thing.

Is it smart to ask AI to write something outright? About as smart as asking a random person on the street to do the same. Is it smart to use proprietary AI that has ulterior political motives? Things might leak, like this, by proxy. Is it smart for people to ask others to proofread their work? Does it matter if that person is a grammar checker that makes suggestions for alternate wording and has most accessible human-written language at its disposal?

I don't see any issue whatsoever in what he did. The model can draw meaning across all human language in a way humans are not even capable of doing. I could go as far as creating a training corpus based on all written works of the country's founding members and generate a nearly perfect simulacrum that includes much of their personality and politics.

The AI is not really the issue here. The issue is how well the person uses the tool available and how they use it. Asking it for writing advice on word specificity shouldn't matter, so long as the person is proofreading the result and it follows their intent. If a politician's significant other writes a sentence of a speech, does it matter? None of them write their own sophist campaign nonsense or their legislative works.

A new meme I expect to take hold is how tempting ChatGPT is. And how the temptation will only grow as LLMs and similar get better, and as our externalized knowledge habits change.

The problem is that tools used to detect AI writing are not accurate. At the end of the day, as long as the information is worded correctly and the information is correct, that's what matters. When you, as a defense lawyer, have AI write an argument citing cases that don't exist... that's when there are problems.

This chud uploaded potentially sensitive information to a public service. People really need education on how to intelligently use these services.

This chud uploaded potentially sensitive information to a public service.

A bill draft, which eventually (maybe) gets signed and is public by its very nature, is sensitive?

People really need education on how to intelligently use these services.

Agreed in principle, but I don't see how what he did was wrong... other than calling ChatGPT a subject matter expert.