Technology

This is a most excellent place for technology news and articles.

Our Rules

- Follow the lemmy.world rules.

- Only tech related news or articles.

- Be excellent to each other!

- Mod approved content bots can post up to 10 articles per day.

- Threads asking for personal tech support may be deleted.

- Politics threads may be removed.

- No memes allowed as posts, OK to post as comments.

- Only approved bots from the list below, this includes using AI responses and summaries. To ask if your bot can be added please contact a mod.

- Check for duplicates before posting, duplicates may be removed

- Accounts 7 days and younger will have their posts automatically removed.

Approved Bots

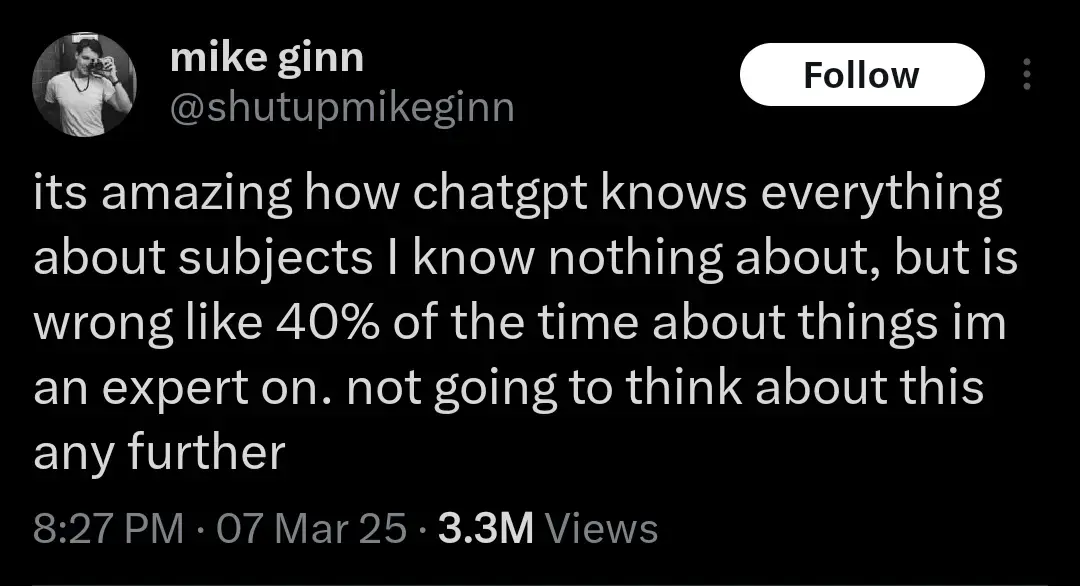

Another realization might be that the humans whose output ChatGPT was trained on were probably already 40% wrong about everything. But let's not think about that either. AI Bad!

This is a salient point that's well worth discussing. We should not be training large language models on any supposedly factual information that people put out. It's super easy to call out a bad research study and have it retracted. But you can't just explain to an AI that that study was wrong, you have to completely retrain it every time. Exacerbating this issue is the way that people tend to view large language models as somehow objective describers of reality, because they're synthetic and emotionless. In truth, an AI holds exactly the same biases as the people who put together the data it was trained on.

I'll bite. Let's think:

- there are three humans who are 98% right about what they say, and where they know they might be wrong, they indicate it

- now there is an llm (fuck capitalization, I hate the ways they are shoved everywhere that much) trained on their output

- now llm is asked about the topic and computes the answer string

By definition that answer string can contain all the probably-wrong things without proper indicators ("might", "under such and such circumstances" etc)

If you want to say 40% wrong llm means 40% wrong sources, prove me wrong

It's more up to you to prove that a hypothetical edge case you dreamed up is more likely than what happens in a normal bell curve. Given the size of typical LLM data this seems futile, but if that's how you want to spend your time, hey knock yourself out.

What the fuck is vibe coding... Whatever it is I hate it already.

Using AI to hack together code without truly understanding what you're doing.

Andrej Karpathy (one of the founders of OpenAI, left OpenAI, worked for Tesla back in 2015-2017, worked for OpenAI a bit more, and is now working on his startup "Eureka Labs - we are building a new kind of school that is AI native") made a tweet defining the term:

There's a new kind of coding I call "vibe coding", where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It's possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper so I barely even touch the keyboard. I ask for the dumbest things like "decrease the padding on the sidebar by half" because I'm too lazy to find it. I "Accept All" always, I don't read the diffs anymore. When I get error messages I just copy paste them in with no comment, usually that fixes it. The code grows beyond my usual comprehension, I'd have to really read through it for a while. Sometimes the LLMs can't fix a bug so I just work around it or ask for random changes until it goes away. It's not too bad for throwaway weekend projects, but still quite amusing. I'm building a project or webapp, but it's not really coding - I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.

People ignore the "It's not too bad for throwaway weekend projects" part, and try to use this style of coding to create "production-grade" code... Let's just say it's not going well.

source (xcancel link)

people tend to become dependent upon AI chatbots when their personal lives are lacking. In other words, the neediest people are developing the deepest parasocial relationship with AI

Preying on the vulnerable is a feature, not a bug.

These same people would be dating a body pillow or trying to marry a video game character.

The issue here isn’t AI, it’s losers using it to replace human contact that they can’t get themselves.

You labeling all lonely people losers is part of the problem

If you are dating a body pillow, I think that's a pretty good sign that you have taken a wrong turn in life.

What if it's either that, or suicide? I imagine that people who make that choice don't have a lot of options: monetary, physical, or mental issues can leave them unable to choose anything else.

I'm confused. If someone is in a place where they are choosing between dating a body pillow and suicide, then they have DEFINITELY made a wrong turn somewhere. They need some kind of assistance, and I hope they can get what they need, no matter what they choose.

I think my statement about "a wrong turn in life" is being interpreted too strongly; it wasn't intended to be such a strong and absolute statement of failure. Someone who's taken a wrong turn has simply made a mistake. It could be minor, it could be serious. I'm not saying their life is worthless. I've made a TON of wrong turns myself.

Trouble is your statement was in answer to @morrowind@lemmy.ml's comment that labeling lonely people as losers is problematic.

Also it still looks like you think people can only be lonely as a consequence of their own mistakes? Serious illness, neurodivergence, trauma, refugee status etc can all produce similar effects of loneliness in people who did nothing to "cause" it.

That's an excellent point that I wasn't considering. Thank you for explaining what I was missing.

Thanks!

That was clear from GPT-3, day 1.

I read a Reddit post about a woman who used GPT-3 to effectively replace her husband, who had passed on not too long before that. She used it as a way to grieve, I suppose? She ended up noticing that she was getting too attached to it, and had to leave him behind a second time...

Ugh, that hit me hard. Poor lady. I hope it helped in some way.

TIL becoming dependent on a tool you frequently use is "something bizarre" - not the ordinary, unsurprising result you would expect with common sense.

If you actually read the article, I'm pretty sure the bizarre thing is really these people using a 'tool', forming a toxic parasocial relationship with it, becoming addicted and beginning to see it as a 'friend'.

That is peak clickbait, bravo.

But how? The thing is utterly dumb. How do you even have a conversation without quitting in frustration from its obviously robotic answers?

But then there's people who have romantic and sexual relationships with inanimate objects, so I guess nothing new.

If you're also dumb, chatgpt seems like a super genius.

It's too bad that some people seem to not comprehend all chatgpt is doing is word prediction. All it knows is which next word fits best based on the words before it. To call it AI is an insult to AI... we used to call OCR AI, now we know better.
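For anyone curious what "which next word fits best based on the words before it" looks like in its crudest form, here is a toy sketch. It is a bigram counter that only looks at the single previous word, and it is an assumption-laden illustration, not how ChatGPT is built: real LLMs use neural networks over tokens and much longer contexts. The function names and the corpus are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up toy corpus, purely for illustration.
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigram(corpus)

# "cat" follows "the" twice, "mat" and "fish" once each.
print(predict_next(model, "the"))
```

The point of the sketch is only that the "prediction" is a lookup of what tended to come next in the training text; nothing in it checks whether the output is true.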

LLM is a subset of ML, which is a subset of AI.

Do you guys remember when the internet was the new thing and everybody was like: "Look, those dumb fucks just putting everything online" and now it's: "Look at this weird motherfucker that doesn't post anything online"

Remember when people used to say and believe "Don't believe everything you read on the internet?"

I miss those days.

I remember when internet was a place

I mean, I stopped in the middle of the grocery store and used it to choose best frozen chicken tenders brand to put in my air fryer. …I am ok though. Yeah.

At the store it calculated which peanuts were cheaper - 3 pounds of shelled peanuts on sale, or 1 pound of no shell peanuts at full price.
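That kind of comparison is simple enough to check by hand. A rough sketch, reading the first bag as peanuts still in the shell: the prices and the edible fraction below are hypothetical placeholders (the comment gives no numbers), so plug in what is on the shelf tag.

```python
def price_per_edible_pound(total_price, pounds, edible_fraction=1.0):
    """Cost per pound of what you actually eat, after discarding the shells."""
    if pounds <= 0 or not 0 < edible_fraction <= 1:
        raise ValueError("pounds must be positive and edible_fraction in (0, 1]")
    return total_price / (pounds * edible_fraction)


# Hypothetical numbers, NOT from the thread:
#   3 lb in-shell bag on sale for $6.00, assuming 75% of the weight is edible
#   1 lb no-shell bag at full price for $4.00
in_shell = price_per_edible_pound(6.00, 3, edible_fraction=0.75)
no_shell = price_per_edible_pound(4.00, 1)

cheaper = "in-shell" if in_shell < no_shell else "no-shell"
print(f"in-shell: ${in_shell:.2f}/lb, no-shell: ${no_shell:.2f}/lb -> {cheaper}")
```

The answer flips depending on the edible fraction you assume, which is the part worth double-checking if a chatbot does the math for you.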