we are not relevant enough for propaganda bots lol

ademir

I appreciate people like you.

- XMPP

- Lemmy

- Akkoma

- PeerTube

Go to hell (na)zionist

The jerk even tried to report you, hahahaha

Of course they matter, otherwise they wouldn't exist. Think for a second before answering with nonsense.

There is a reason this type of platform has a voting system.

I agree. One thing that should be available when choosing an instance is an easy way to see which other instances it blocks.

Also, IMHO, 90% of instance blocks are childish drama. Lots of instances with the same worldview block each other because their admins are fighting, and this problem is not exclusive to Lemmy: all ActivityPub platforms suffer from it.

The ideal model would be for instances to be more tolerant and use instance blocks only as a last resort, for spam or crimes, and for users themselves to block whatever they don't want to see.

still blocks e.g. threads.net.

Anyway, Lemmy can't interact with threads.net.

Verification: Implement robust account verification and clearly label bot accounts.

☑ Clear label for bot accounts

☑ 3 different levels of captcha verification (I use the intermediate level on my instance and rarely deal with any bots)

Behavioral Analysis: Use algorithms to identify bot-like behavior.

Profiling algorithms seem like exactly what people are running away from when they choose fediverse platforms, so this kind of solution has to be very well thought out and communicated.

User Reporting: Enable easy reporting of suspected bots by users.

☑ Reporting in Lemmy is just as easy as anywhere else.

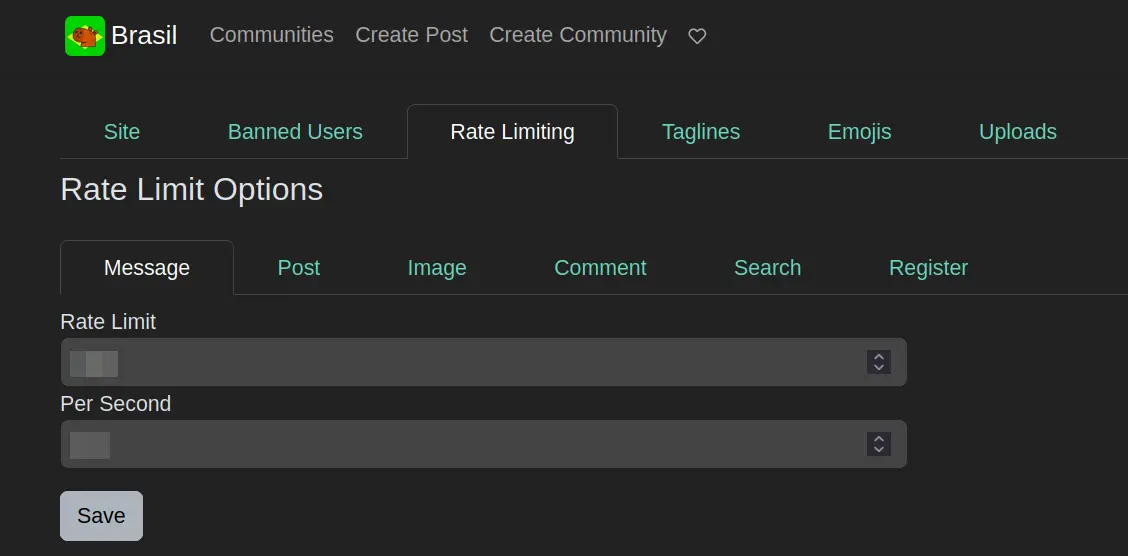

Rate Limiting: Limit posting frequency to reduce spam.

☑ Like this?

image
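For anyone wondering what a rate limit like that boils down to, here is a minimal token-bucket sketch in Python. It is only an illustration of the general idea, not Lemmy's actual implementation; the class names `TokenBucket` and `PostRateLimiter` and the "3 posts per 60 seconds" numbers are made up for the example.

```python
import time


class TokenBucket:
    """Allow up to `capacity` actions per `per_seconds`, refilled continuously."""

    def __init__(self, capacity, per_seconds):
        self.capacity = capacity
        self.per_seconds = per_seconds
        self.tokens = float(capacity)  # start with a full bucket
        self.last_refill = None        # timestamp of the previous check

    def allow(self, now):
        # Refill in proportion to the time elapsed since the last check.
        if self.last_refill is not None:
            elapsed = max(0.0, now - self.last_refill)
            refill = elapsed * self.capacity / self.per_seconds
            self.tokens = min(float(self.capacity), self.tokens + refill)
        self.last_refill = now
        # Spend one token per action, or reject if the bucket is empty.
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PostRateLimiter:
    """Hypothetical per-user limiter: one bucket per user name."""

    def __init__(self, capacity, per_seconds):
        self.capacity = capacity
        self.per_seconds = per_seconds
        self.buckets = {}

    def allow(self, user, now=None):
        if now is None:
            now = time.monotonic()
        if user not in self.buckets:
            self.buckets[user] = TokenBucket(self.capacity, self.per_seconds)
        return self.buckets[user].allow(now)


# Assumed policy for the example: 3 posts per 60 seconds per user.
limiter = PostRateLimiter(capacity=3, per_seconds=60.0)
burst = [limiter.allow("alice", now=0.0) for _ in range(4)]
```

In this example the first three posts in the burst go through and the fourth is rejected until enough time passes for a token to refill. Other users get their own bucket, so one spammer does not slow anyone else down.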

Content Moderation: Enhance tools to detect and manage bot-generated content.

What do you suggest other than profiling accounts?

User Education: Provide resources to help users recognize bots.

This is not up to the Lemmy development team.

Adaptive Policies: Regularly update policies to counter evolving bot tactics.

Same as above.

How could one help them?

That's actually... quite accurate.