this post was submitted on 05 Feb 2024

Memes

They're kind of right. LLMs are not general intelligence and there's not much evidence to suggest that LLMs will lead to general intelligence. A lot of the hype around AI is manufactured by VCs and companies that stand to make a lot of money off of the AI branding/hype.

Yeah, this sounds about right. What was OP implying? I'm a bit lost.

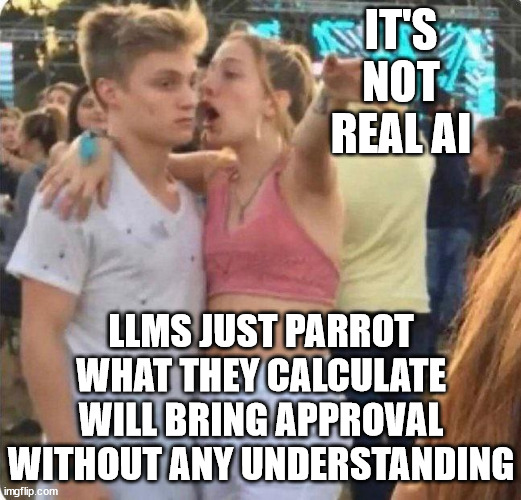

I believe they were implying that a lot of the people who say "it's not real AI it's just an LLM" are simply parroting what they've heard.

Which is a fair point, because AI has never meant "general AI"; it's an umbrella term for a wide variety of intelligence-like tasks as performed by computers.

Autocorrect on your phone is a type of AI: it compares the words you type against a database of known words using a "typo distance", and it adds new words to its database when you overrule it so it doesn't make the same mistake again.
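As a rough illustration of that mechanism, here is a minimal Python sketch. It assumes the "typo distance" is Levenshtein edit distance (the comment doesn't say which metric phones actually use, and real keyboards likely use more elaborate models, so treat this as a toy). The `Autocorrect` class, its `suggest`/`overrule` methods, and the `max_distance` cutoff are hypothetical names made up for this example.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions,
    and substitutions needed to turn `a` into `b`."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


class Autocorrect:
    """Toy autocorrect (hypothetical): suggests the closest known word
    and learns any word the user insists on keeping."""

    def __init__(self, words, max_distance=2):
        self.words = set(words)
        self.max_distance = max_distance  # assumed cutoff for "close enough"

    def suggest(self, typed: str) -> str:
        if typed in self.words:
            return typed
        # Closest known word; ties broken alphabetically for determinism.
        best = min(self.words, key=lambda w: (levenshtein(typed, w), w), default=None)
        if best is not None and levenshtein(typed, best) <= self.max_distance:
            return best
        return typed  # nothing close enough, leave the input alone

    def overrule(self, typed: str) -> None:
        # The user rejected the correction, so add the word to the dictionary.
        self.words.add(typed)
```

With the dictionary `["hello", "world", "help"]`, `suggest("helo")` returns `"hello"`; after `overrule("helo")`, the same input is left unchanged.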

It's like saying a motorcycle isn't a real vehicle because a real vehicle has two wings and a roof and flies through the air carrying hundreds of people.

I've often seen people on Lemmy confidently state that current "AI" thinks and learns exactly like humans and that LLMs work exactly like human brains, etc.

Are you sure this wasn't just people stating that when it comes to training on art there is no functional difference in the sense that both humans and AI need to see art to make it?

Do you mean in the everyday sense or the academic sense? I think this is why there's such grumbling around the topic. Academically speaking that may be correct, but for the general public, AI has been a more muddled term, presented in a much more robust, general-AI way, especially in fiction. Look at any number of sci-fi movies featuring forms of AI, whether it's the movie literally named A.I., Terminator, Blade Runner, or more recently Ex Machina.

Each of these may technically be presenting general AI, but for the public, it's just AI. In a weird way, this discussion is an inversion of what one usually sees between academics and the public. Usually academics are trying to get the public not to use technical terms loosely; here, some of the public is trying to get some of the tech/academic sphere not to use technical terms loosely, at least as they see it.

Arguably it stems from a misunderstanding, but if anyone should understand the dynamics of language, you'd hope it would be the people trying to calibrate machines to process language.

Well, that's the issue at the heart of it I think.

How much should we cater our choice of words to those who know the least?

I'm not an academic, and I don't work with AI, but I do work with computers and I know the distinction between AI and general AI.

I'm a little irritated by the theme, given that I work in the security industry and it's now difficult to use the common abbreviation for cryptography ("crypto") without Bitcoin getting mixed up in everything.

All that aside, the point is that people talking about how it's not "real AI" often come across as people who don't know what they're talking about, which was the point of the image.

The funny part is, as I mention in my comment, isn't that how both parties to these conversations feel? The problem is that they're talking past each other. The worst part is that the more educated participant should arguably be more apt to recognize this and clarify or, better yet, ask for clarification so they can see where the disconnect is and improve communication.

Also, let's remember that it's not the laypeople describing the technology in personifying terms like "learning" or "hallucinating", which fuels some of the grumbling.

Well, I don't generally expect an academic level of discourse out of image macros found on the Internet.

Usually when I see people talking about it, I do see people making clarifying comments and asking questions like you describe. Sorta like when I described how AI is an umbrella term.

I'm not sure I'd say that learning and hallucinating are personified terms. We see both of those out of any organism complex enough to have something that works like a nervous system, for example.

I believe OP is attempting to take on an army of straw men in the form of a poorly chosen meme template.

No, people say this constantly. It's not just a strawman.

I think OP implied that AI is neat.

I guess that no matter what they are or what you call them, they can still be useful.

Pretty sure the meme format is for something you get extremely worked up about and want to passionately tell someone, even at inappropriate moments, but no one really gives a fuck.

Depends on what you mean by general intelligence. I've seen a lot of people confuse Artificial General Intelligence with AI more broadly. Even something as simple as the k-nearest neighbors algorithm is artificial intelligence, since AI is a much broader topic than AGI.
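To show how little machinery that takes, here is a minimal k-nearest neighbors classifier in Python: it labels a new point by majority vote among the `k` closest labeled examples, using Euclidean distance. The function name, the `(features, label)` data layout, and the default `k=3` are illustrative assumptions; a tied vote goes to the label encountered first among the nearest points, which is how `Counter.most_common` orders equal counts.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest neighbors.

    train: list of (feature_tuple, label) pairs (hypothetical layout).
    query: feature tuple with the same number of dimensions.
    """
    # Sort every stored example by Euclidean distance to the query.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    nearest_labels = [label for _, label in by_distance[:k]]
    # Most common label among the k nearest examples wins.
    return Counter(nearest_labels).most_common(1)[0][0]


train = [((1, 1), "a"), ((1, 2), "a"), ((2, 1), "a"),
         ((8, 8), "b"), ((8, 9), "b"), ((9, 8), "b")]
print(knn_predict(train, (1.5, 1.5)))  # a
```

There is no training step at all: the "model" is just the stored examples, which is part of why it makes a good example of AI that is nowhere near AGI.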

Wikipedia gives two definitions of AGI:

If some task can be represented through text, an LLM can, in theory, be trained to perform it either through fine-tuning or few-shot learning. The question then is how general do LLMs have to be for one to consider them to be AGIs, and there's no hard metric for that question.
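For anyone unfamiliar with the term, few-shot learning in this sense usually means putting a handful of worked examples into the prompt rather than updating the model's weights (which is what fine-tuning does). Below is a minimal Python sketch of building such a prompt; the `Input:`/`Output:` layout and the `build_few_shot_prompt` helper are hypothetical conventions, and the call to an actual model is deliberately left out since the comment doesn't imply any specific API.

```python
from __future__ import annotations


def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Assemble a text prompt: instruction, worked examples, then the new input.

    The model is expected to continue the pattern after the final "Output:".
    """
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")  # blank line separates examples
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cat", "chat"), ("dog", "chien")],
    "bird",
)
print(prompt)
```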

I can't pass the bar exam like GPT-4 did, and it also has a lot more general knowledge than me. Sure, it gets stuff wrong, but so do humans. We can interact with physical objects in ways that GPT-4 can't, but it is catching up. Plus, Stephen Hawking couldn't move the way most people can, and we certainly wouldn't say that he lacked general intelligence.

I'm rambling but I think you get the point. There's no clear threshold or way to calculate how "general" an AI has to be before we consider it an AGI, which is why some people argue that the best LLMs are already examples of general intelligence.

Well, I mean the ability to solve problems we don't already have the solution to. Can it cure cancer? Can it solve the P vs. NP problem?

And by the way, Wikipedia tags that second definition as dubious, since it's the definition put forth by OpenAI, which, again, has a financial incentive to make us believe LLMs will lead to AGI.

Not only has it not been proven whether LLMs will lead to AGI, it hasn't even been proven that AGIs are possible.

No, it can't. If the task requires the LLM to solve a problem that hasn't been solved before, it will fail.

Exams often are bad measures of intelligence. They typically measure your ability to consume, retain, and recall facts. LLMs are very good at that.

Ask an LLM to solve a problem without a known solution and it will fail.

The ability to interact with physical objects is very clearly not a good test for general intelligence and I never claimed otherwise.

It depends a lot on how we perceive "intelligence". It's a far vaguer term than most, so people have very different views of it. Some might take it to mean that the response to stimuli and the output (language, art, or any other form) are indistinguishable from a human's. But many people would also agree that whales and dolphins have a level of "intelligence" equal or superior to ours. The term is too vague to apply with confidence, and more importantly, people often use it to mean completely different concepts: "intelligence" as a measurable property of how quickly or efficiently a being can learn or use knowledge (or, more vaguely, its "capacity to reason"); "intelligence" as "consciousness" in general; "intelligence" as the amount of knowledge or experience one currently has or can memorize; and so on.

In computer science, "artificial intelligence" has always simply referred to a program making decisions based on input. There was never a bar for how "complex" it had to be to count as AI. That's why Minecraft zombies, shitty FPS bots, and simple algorithms made to beat tabletop games are all "AI", even though they're clearly not that smart and don't even "learn".
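As an example of a simple game-playing algorithm that never learns anything, here is a small Python sketch for a toy version of Nim: one pile, each turn a player removes 1, 2, or 3 objects, and whoever takes the last object wins. It exhaustively searches the game tree, which is minimax reduced to win/lose outcomes. The game rules and function names are chosen for illustration, not taken from any particular bot.

```python
from functools import lru_cache

MOVES = (1, 2, 3)  # assumed rule: a player may take 1, 2, or 3 objects


@lru_cache(maxsize=None)
def can_win(pile: int) -> bool:
    """True if the player to move can force a win (taking the last object wins).

    With an empty pile the previous player took the last object, so the
    player to move has lost; `any` over no legal moves returns False.
    """
    return any(move <= pile and not can_win(pile - move) for move in MOVES)


def best_move(pile: int) -> int:
    """Return a move that leaves the opponent in a losing position (pile >= 1)."""
    for move in MOVES:
        if move <= pile and not can_win(pile - move):
            return move
    return 1  # every move loses against perfect play; just take one
```

With these rules, the search rediscovers the known strategy of leaving the opponent a multiple of 4: `best_move(7)` returns 3 and `best_move(5)` returns 1. Nothing is learned; the program just checks every possibility.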

Even sentience is on a scale. Even cows or dogs or parrots or crows are sentient, but not as much as we are. Computers are not sentient yet, but one day they will be. And then soon after they will be more sentient than us. They'll be able to see their own brains working, analyze their own thoughts and emotions(?) in real time and be able to achieve a level of self reflection and navel gazing undreamed of by human minds! :D

The damn Viet Cong 😒

Only 2 people on the server left alive, knife fight in the center

OP didn't say general intelligence. LLMs mimic what actually intelligent beings do, AKA artificial intelligence.

Claiming AGI is the only "real" AI is like claiming Swiss army knives are the only "real" knives. It's just silly.

But also, the number of people who seem to think we need a magic soul to perform useful work is way, way too high.

The main problem is that idiots seem to have watched one too many movies about robots with souls and gotten confused between real life and fantasy, especially shitty journalists way out of their depth.

This big gotcha, "they don't live up to the hype", is 100% from people who heard "AI" and thought of bad Will Smith movies. LLMs absolutely live up to the sensible things people actually hoped for and have exceeded those expectations. They're also incredibly good at a huge range of very useful tasks that have traditionally been considered to require intelligence. But of course they're not magically able to do everything; that's not how anyone actually involved said they would work or expected them to work.

No idea why you're downvoted. This is correct.