I don't think the community is generally against AI; there are plenty of FOSS projects. They just don't like cash grabs, enshittification and sending personal data to someone else's computer.

sending personal data to someone else’s computer.

I think this is spot on. I think it's exciting with LLMs but I'm not gonna give the huge corporations my data, nor anyone else for that matter.

Reminder that we don't even have AI yet, just machine learning models, which are not the same thing despite wide misuse of the term AI.

Have you mentioned that in gaming forums as well when they talked about AI?

AI is a broad term and can mean many different things, it does not need to mean 'true' AI

I won't rehash the arguments around "AI" that others are best placed to make.

My main issue is that AI as a term is basically a marketing one, used to convince people that these tools do something they don't, and it's causing real harm. It's redirecting resources and attention onto a very narrow subset of tools that replace other, less intensive tools. These tools have significant impacts (during an existential crisis around our use and consumption of energy). There are some really good targeted uses of machine learning techniques, but they are being drowned out by a hype train that is determined to make the general public think that we have, or are near, Data from Star Trek.

Additionally, as others have said, the current state of "AI" has a very anti-FOSS ethos, with big firms using and misusing their monopolies to steal, borrow and co-opt data that isn't theirs to build something that contains that data but is their copyright. Some of this data is intensely personal and sensitive, and it was not originally shared with the intent of training a model which may in certain circumstances spit out that data verbatim.

Lastly, since you use the term Luddite: it's worth actually engaging with what that movement was about. Whilst it's pitched now as a generic anti-technology backlash, it was in fact a movement of people who saw what the priorities and choices in the new technology meant for them: the people who didn't own the technology and would get worse living and working conditions as a result. As it turned out, they were almost exactly correct in their predictions. They are indeed worth thinking about as an allegory for the moment we find ourselves in. How do ordinary people want this technology to change our lives? Who do we want to control it? Given its implications for our climate needs, can we afford to use it now, and if so, for what purposes?

Personally, I can't wait for the hype train to pop (or maybe depart?) so we can get back to rational discussions about the best uses of machine learning (and computing in general) for the betterment of all rather than the enrichment of a few.

It's a surprisingly good comparison especially when you look at the reactions: frame breaking vs data poisoning.

The problem isn't progress, the problem is that some of us disagree with the idea that what's being touted is actual progress. The things LLMs are actually good at they've been doing for years (language translation); the rest of it is so inexact it can't be trusted.

I can't trust any llm generated code because it lies about what it's doing, so I need to verify everything it generates anyway in which case it's easier to write it myself. I keep trying it and it looks impressive until it ends up at a way worse version of something I could have already written.

I assume that it's the same way with everything I'm not an expert in. In which case it's worse than useless to me, I can't trust anything it says.

The only thing I can use it for is to tell me things I already know and that basically makes it a toy or a game.

That's not even getting into the security implications of giving shitty software access to all your sensitive data etc.

I think the biggest problem is that ai for now is not an exact tool that gets everything right. Because that's just not what it is built to do. Which goes against much of the philosophy of most tools you'd find on your Linux PC.

Secondly: Many people who choose Linux or other foss operating system do so, at least partially, to stay in control over their system which includes knowing why stuff happens and being able to fix stuff. Again that is just not what AI can currently deliver and it's unlikely it will ever do that.

So I see why people just choose to ignore the whole thing altogether.

One of the main things that turns people off when the topic of "AI" comes up is the absolutely ridiculous level of hype it gets. For instance, people claiming that current LLMs are a revolution comparable to the invention of the printing press, and that they have such immense potential that if you don't cram them into every product you can all your software will soon be obsolete.

Luddites were not as opposed to new technology as you say it here. They were mainly concerned about what technology would do to whom.

A helpful history right here: https://www.hachettebookgroup.com/titles/brian-merchant/blood-in-the-machine/9780316487740/?lens=little-brown

Tech Enthusiasts: Everything in my house is wired to the Internet of Things! I control it all from my smartphone! My smart-house is bluetooth enabled and I can give it voice commands via alexa! I love the future!

Programmers / Engineers: The most recent piece of technology I own is a printer from 2004 and I keep a loaded gun ready to shoot it if it ever makes an unexpected noise.

No, it is because people in the Linux community are usually a bit more tech-savvy than average and are aware that OpenAI/Microsoft is very likely breaking the law in how they collect data for training their AI.

We have seen that companies like OpenAI completely disregard the rights of the people who created the data that they use in their for-profit LLMs (like what they did to Scarlett Johansson), including their right to control whether their code/documentation/artwork is used in for-profit ventures. That is especially true when they take Creative Commons "Share Alike" licensed documentation, or GPL licensed code, which can only be used if the code that reuses it is made public, which OpenAI and Microsoft do not do.

So OpenAI has deliberately conflated LLM technology with general intelligence (AGI) in order to hype their products, and so now their possibly illegal actions are also being associated with all AI. The anger toward AI is not directed at the technology itself, it is directed at companies like OpenAI who have tried to make their shitty brand synonymous with the technology.

And I haven't even yet mentioned:

- how people are getting fired by companies who are replacing them with AI

- or how it has been used to target civilians in war zones

- or how deep fakes are being used to scam vulnerable people.

The technology could be used for good, especially in the Linux community, but lately there has been a surge of unethical (and sometimes outright criminal) uses of AI by some of the world's wealthiest companies.

...this looks like it was written by a supervisor who has no idea what AI actually is, but desperately wants it shoehorned into the next project because it's the latest buzzword.

Guys we need AI on our blockchain web3.0 iot. Just imagine the synergy

Gnome and other desktops need to start working on integrating FOSS AI models so that we don't become obsolete.

I don't get it. How would Linux desktops become obsolete if they don't have native AI toolsets in their DEs? It's not like they have an 80% market share. People who run them as daily drivers are still niche, and most people don't even know Linux exists. Most people grew up with Microsoft and Apple shoving ads down their throats and using them first-hand in schools, and that's all they know and were taught. If I need AI, I will find ways to integrate it into my workflow myself, not because the dev thinks I need it.

And if you really need something like MS's Recall, here is a FOSS version of it.

You can't do machine learning without tons of data and processing power.

Commercial "AI" has been built on fucking over everything that moves, on both counts. They suck power at alarming rates, especially given the state of the climate, and they blatantly ignore copyright and privacy.

FOSS tends to be based on a philosophy that's strongly opposed to at least some of these methods. To start with, FOSS is built around respecting copyright, and Microsoft is currently stealing GitHub code, anonymizing it, and offering it under their Copilot product, while explicitly promising companies who buy Copilot that they will insulate them from any legal fallout.

So yeah, some people in the "Linux space" are a bit annoyed about these things, to put it mildly.

Edit: but, to address your concerns, there's nothing to be gained by rushing head-first into new technology. FOSS stands to gain nothing from early adoption. FOSS is a cultural movement not a commercial entity. When and if the technology will be practical and widely available it will be incorporated into FOSS. If it won't be practical or will be proprietary, it won't. There's nothing personal about that.

I'm not against AI. I'm against the hordes of privacy-disrespecting data collection, the fact that everybody is irresponsibly rushing to slap AI into everything even when it doesn't make sense because line go up, and the fact nobody is taking the limitations of things like Large Language Models seriously.

The current AI craze is like the NFTs craze in a lot of ways, but more useful and not going to just disappear. In a year or three the crazed C-level idiots chasing the next magic dragon will settle down, the technology will settle into the places where it's actually useful, and investors will stop throwing all the cash at any mention of AI with zero skepticism.

It's not Luddite to be skeptical of the hot new craze. It's prudent as long as you don't let yourself slip into regressive thinking.

There are already a lot of open models and tools out there. I totally disagree that Linux distros or DEs should be looking to bake in AI features. People can run an LLM on their computer just like they run any other application.

AI just requires a level of trust that these companies have not earned.

I get that AI has many problems but at the same time the potential it has is immense, especially as an assistant on personal computers

[Citation needed]

Gnome and other desktops need to start working on integrating FOSS AI models so that we don’t become obsolete.

And this mentality is exactly what AI sceptics criticise. The whole reason why the AI arms race is going on is because every company/organisation seems convinced that sci-fi like AI is right around the corner, and the first one to get it will capture 100% of the market in their walled garden while everyone else fades into obscurity. They're all so obsessed with this that they don't see a problem with putting a virtual dumbass that is constantly wrong in charge.

just a historical factoid that a lot of people don't realize: the luddites weren't anti technology without reason. they were apprehensive about new technology that threatened their livelihoods, technology that threatened them with starvation and destitution in the pursuit of profit. i think the comparison with opposition to AI is pretty apt, in many cases, honestly.

Gnome and other desktops need to start working on integrating FOSS

In addition to everything everyone else has already said, why does this have anything to do with desktop environments at all? Remember, most open-source software comes from one or two individual programmers scratching a personal itch—not all of it is part of your DE, nor should it be. If someone writes an open-source LLM-driven program that does something useful to a significant segment of the Linux community, it will get packaged by at least some distros, accrete various front-ends in different toolkits, and so on.

However, I don't think that day is coming soon. Most of the things "Apple Intelligence" seems to be intended to fuel are either useless or downright offputting to me, and I doubt I'm the only one—for instance, I don't talk to my computer unless I'm cussing it out, and I'd rather it not understand that. My guess is that the first desktop-directed offering we see in Linux is going to be an image generator frontend, which I don't need but can see use cases for even if usage of the generated images is restricted (see below).

Anyway, if this is your particular itch, you can scratch it—by paying someone to write the code for you (or starting a crowdfunding campaign for same), if you don't know how to do it yourself. If this isn't worth money or time to you, why should it be to anyone else? Linux isn't in competition with the proprietary OSs in the way you seem to think.

As for why LLMs are so heavily disliked in the open-source community? There are three reasons:

- The fact that they give inaccurate responses, which can be hilarious, dangerous, or tedious depending on the question asked, but a lot of nontechnical people, including management at companies trying to incorporate "AI" into their products, don't realize the answers can be dangerously inaccurate.

- Disputes over the legality and morality of using scraped data in training sets.

- Disputes over who owns the copyright of LLM-generated code (and other materials, but especially code).

Item 1 can theoretically be solved by bigger and better AI models, but 2 and 3 can't be. They have to be decided by the courts, and at an international level, too. We might even be talking treaty negotiations. I'd be surprised if that takes less than ten years. In the meanwhile, for instance, it's very, very dangerous for any open-source project to accept a code patch written with the aid of an LLM—depending on the conclusion the courts come to, it might have to be torn out down the line, along with everything built on top of it. The inability to use LLM output for open source or commercial purposes without taking a big legal risk kneecaps the value of the applications. Unlike Apple or Microsoft, the Linux community can't bribe enough judges to make the problems disappear.

Maybe we'd be warmer towards AI if it wasn't being used as a way for big companies to steal content from smaller creative types in order to fund valueless wealth generators.

Big surprise that a group consisting of people rather than corporations is mad about it.

I don't like AI because it's literally not AI. I know damn well that it is just a data scraping tool that throws a bunch of 'probably right' sentences or images into a proverbial blender and spits out an answer that has no actual comprehension or consistency behind it. It takes only an incredibly basic knowledge of computers and brains to know that we cannot make an actual intelligent program using the Von Neumann style of computer.

I have absolutely no interest in technology being sold to me based on a lie. And if we're not calling this out for the lie it is, then it's going to just keep getting pushed by people trying to make money off the concept at the stock market.

AI is mostly just hype. It's the new blockchain

There have been important AI technologies in the past for things like vision processing, and the new generative AI has some uses, like as a decent (although often inaccurate) summarizer/search engine. However, it's also nothing revolutionary.

It's just a neat piece of tech

But here come MS, Apple, other big companies, and tech bros to push AI hard, and it's so obv that it's all just a big scam to get more of your data and to lock down systems further or be the face of get-rich-quick schemes.

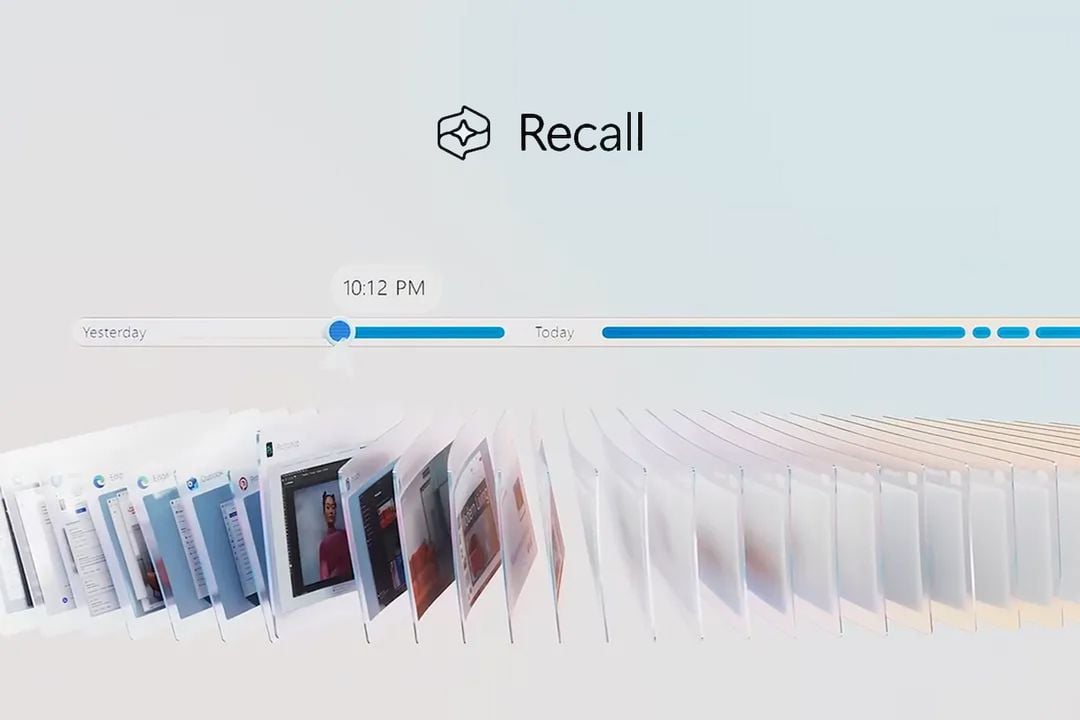

I mean the image you posted is a great example. Recall is a useless feature that also happens to store screenshots of everything you've been doing. You're delusional if you think MS is actually going to keep that totally local. Both MS and the US government are going to have your entire history of using the computer, and that doesn't sit right with FOSS people.

FOSS people tend to be more technical than the average person, so they don't fall for tech enthusiast nonsense as much.

As someone whose employer is strongly pushing them to use AI assistants in coding: no. At best, it's like being tied to a shitty intern that copies code off stack overflow and then blows me up on slack when it magically doesn't work. I still don't understand why everyone is so excited about them. The only tasks they can handle competently are tasks I can easily do on my own (and with a lot less re-typing.)

Sure, they'll grow over the years, but Altman et al are complaining that they're running out of training data. And even with an unlimited body of training data for future models, we'll still end up with something about as intelligent as a kid that's been locked in a windowless room with books their whole life and can either parrot opinions they've read or make shit up and hope you believe it. I think we'll get a series of incompetent products with increasing ability to make wrong shit up on the fly until C-suite moves on to the next shiny bullshit.

That's not to say we're not capable of creating a generally-intelligent system on par with or exceeding human intelligence, but I really don't think LLMs will allow for that.

tl;dr: a lot of woo in the tech community that the linux community isn't as on board with

You should read up on what the luddites actually fought for. They were actually based af.

A lot of mentions of AI from companies are absolute marketing bullshit. And if you can't see that, you don't want to.

One of the critical differences between FOSS and commercial software is that FOSS projects don't need to drive sales and consequently also don't need to immediately jump onto technology trends in order to not look like they're lagging behind the competition.

What I've consistently seen from FOSS over the 30 years I've been using it, is that if a technology choice is a good fit for the problem, then it will be adopted into projects where relevant.

I believe that there are use cases where LLM processing is absolutely a good fit, and the projects that need that functionality will use it. What you're less likely to see is 'AI' added to everything, because it isn't generally a good solution to most problems in its current form.

As an aside, you may be less likely to get good faith interaction with your question while using the term 'luddite' as it is quite pejorative.

Gnome and other desktops need to start working on integrating FOSS AI models so that we don’t become obsolete.

lol no thanks.

Is there no electron wrapper around ChatGPT yet? Jeez we better hurry, imagine having to use your browser like... For pretty much everything else.

Good.

The Luddites were right.

The first problem, as with many things AI, is nailing down just what you mean with AI.

The second problem, as with many things Linux, is the question of shipping these things with the Desktop Environment / OS by default, given that not everybody wants or needs that and for those that don't, it's just useless bloat.

The third problem, as with many things FOSS or AI, is transparency, here particularly training. Would I have to train the models myself? If yes: How would I acquire training data that has quantity, quality and transparent control of sources? If no: What control do I have over the source material the pre-trained model I get uses?

The fourth problem is privacy. The tradeoff for a universal assistant is universal access, which requires universal trust. Even if it can only fetch information (read files, query the web), the automated web searches could expose private data to whatever search engine or websites it uses. Particularly in the wake of Recall, the idea of saying "Oh actually we want to do the same as Microsoft" would harm Linux adoption more than it would help.

The fifth problem is control. The more control you hand to machines, the more control their developers will have. This isn't just about trusting the machines at that point, it's about trusting the developers. To build something the caliber of full AI assistants, you'd need a ridiculous amount of volunteer effort, particularly due to the splintering that always comes with such projects and the friction that creates. Alternatively, you'd need corporate contributions, and they always come with an expectation of profit. Hence we're back to trust: Do you trust a corporation big enough to make a difference to contribute to such an endeavour without any avenue of abuse? I don't.

Linux has survived long enough despite not keeping up with every mainstream development. In fact, what drove me to Linux was precisely that it doesn't do everything Microsoft does. The idea of volunteers (by and large unorganised) trying to match the sheer power of a megacorp (with a strict hierarchy for who calls the shots) in development power to produce such an assistant is ridiculous enough, but the suggestion that DEs should come with it already integrated? Hell no

One useful application of "AI" (machine learning) I could see: Evaluating logs to detect recurring errors and cross-referencing them with other logs to see if there are correlations, which might help with troubleshooting.
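For what it's worth, here's a rough sketch of the idea in Python. To be clear, this is a plain counting baseline and not machine learning, and every name in it (`normalize`, `recurring_errors`, `co_occurring`) plus the `(minute, line)` log format is made up for illustration. It just shows the two steps: collapse variable bits so repeated errors group together, then look for errors from two logs that land in the same time bucket.

```python
import re
from collections import Counter


def normalize(line: str) -> str:
    """Collapse hex IDs and numbers so repeated errors share one template."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", line)
    return re.sub(r"\d+", "<N>", line).strip()


def recurring_errors(lines, min_count=2):
    """Return {template: count} for error lines seen at least min_count times."""
    counts = Counter(normalize(l) for l in lines if "error" in l.lower())
    return {t: c for t, c in counts.items() if c >= min_count}


def co_occurring(log_a, log_b):
    """Count pairs of error templates from two logs that share a time bucket.

    Each log is a list of (minute, line) tuples; this format is an assumption.
    """
    by_minute = {}
    for minute, line in log_b:
        if "error" in line.lower():
            by_minute.setdefault(minute, set()).add(normalize(line))
    pairs = Counter()
    for minute, line in log_a:
        if "error" in line.lower():
            for other in by_minute.get(minute, ()):
                pairs[(normalize(line), other)] += 1
    return pairs
```

Pairs with a high count are only candidates for a correlation, so you'd still have to check them by hand. A real tool would probably want proper timestamp parsing and something smarter than matching the word "error".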

That doesn't need to be an integrated desktop assistant, it can just be a regular app.

Really, that applies to every possible AI tool. Make it an app, if you care enough. People can install it for themselves if they want. But for the love of the Machine God, don't let the hype blind you to the issues.

Great technology is invisible.

As long as AI is advertised as being a unique selling point, I'm not interested.

If you think of specific problems it is better to point them out and try to think of solutions, not reject the technology as a whole.

Yes. There are problems with the Gnome desktop environment. Without looking at the issue tracker, I can assure you that AI is not the solution to any of them. Even if AI may be a possible solution to a problem, it would probably not be the best one.

Sounds like something an AI would post. Quick, what color are your eyes?