Technology

This is a most excellent place for technology news and articles.

Our Rules

- Follow the lemmy.world rules.

- Only tech related news or articles.

- Be excellent to each other!

- Mod-approved content bots can post up to 10 articles per day.

- Threads asking for personal tech support may be deleted.

- Politics threads may be removed.

- No memes allowed as posts; they're OK to post as comments.

- Only approved bots from the list below are allowed; this includes using AI responses and summaries. To ask whether your bot can be added, please contact a mod.

- Check for duplicates before posting; duplicates may be removed.

- Accounts 7 days old or younger will have their posts automatically removed.

Approved Bots

Why would you ask a bot to generate a stereotypical image and then be surprised it generates a stereotypical image? If you give it a simplistic prompt, it will come up with a simplistic response.

Kinda makes sense though. I'd expect images that are actually labelled "an Indian person" to over-represent people wearing this kind of clothing. An image of an Indian person doing something mundane in more generic clothing is probably more often than not going to be labelled "a person doing X" rather than "an Indian person doing X". Not sure why these authors are so surprised by this.

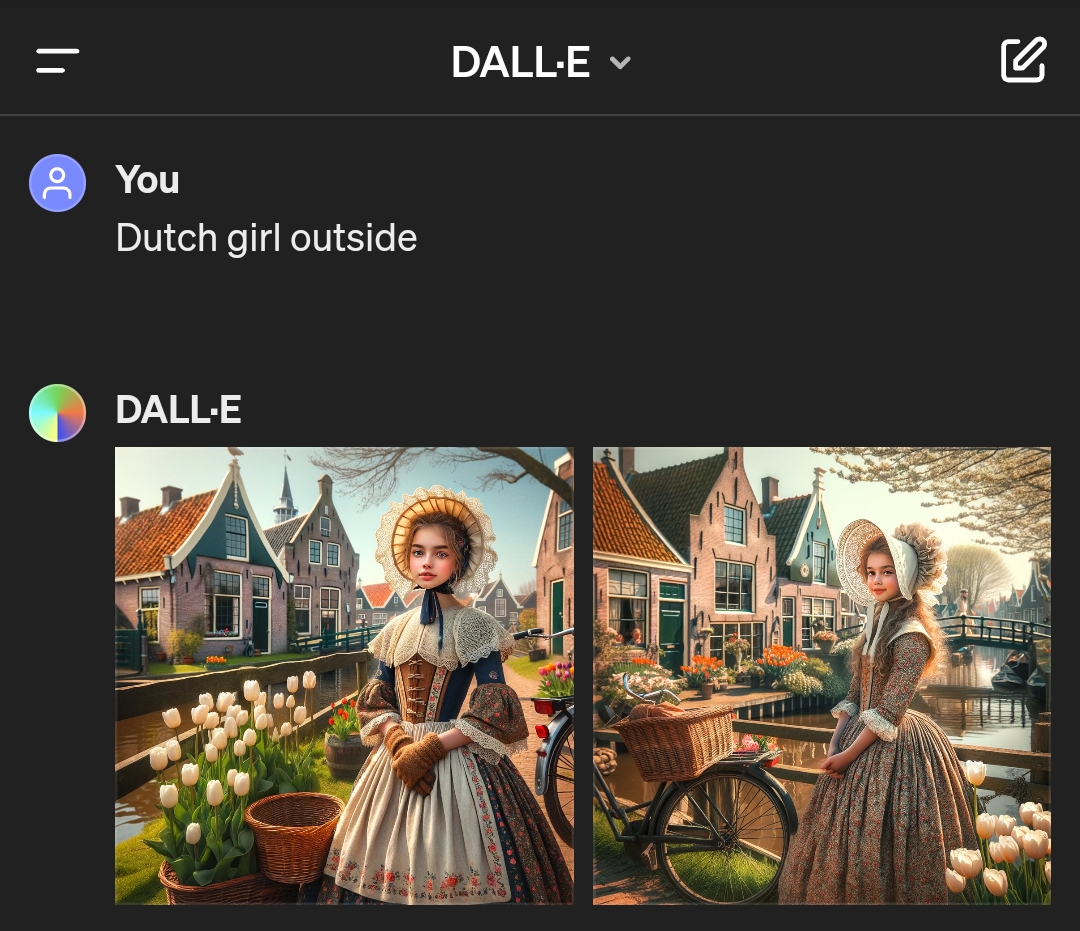

Meanwhile on DALL-E...

I'm just surprised there's no windmill in either of them. Canals, bikes, tulips... Check check check.

Careful, the next generated image is gonna contain a windmill with clogs for blades

Well, they do run on air...

That's Sikh

Indians can be Sikh; not all Indians are Hindu.

Yes, but the gentlemen in the images are also Sikhs.

Sikh pun

Get down with the Sikhness

not me calling in sikh to work

Articles like this kill me because they imply it's kinda sorta racist to draw images like the ones they show, which look exactly like the cover of half the Bollywood movies ever made.

Yes, if you want to get a certain type of person in your image, you need to choose descriptive words. Imagine going to an artist and saying 'I need a picture and almost nothing matters besides the fact they look Indian.' Unless they're bad at their job, they'll give you a Bollywood movie cover with a guy from Rajasthan in a turban, just like India's own official tourism website does.

Ask for a businessman in Delhi or an Urdu shopkeeper with an Elvis quiff if that's what you want.

> the ones they show, which look exactly like the cover of half the Bollywood movies ever made.

That's almost certainly how they're building up the data, but it's more a consequence of tagging. It's the same reason you'll get Marvel's Iron Man when you ask an AI generator to "Draw me an iron man." There's no shortage of metallic-looking people in commercial media, but by keyword (and thanks to aggressive trademark enforcement), those terms are going to pull back a superabundance of a single common image.

> Imagine going to an artist and saying ‘I need a picture and almost nothing matters besides the fact they look Indian’

I mean, the first thing that pops into my head is Mahatma Gandhi, and he wasn't typically in a turban. But he's going to be tagged as "Gandhi", not "Indian". You're also very unlikely to get a young Gandhi, as there are far more pictures of him later in life.

> Ask for a businessman in Delhi or an Urdu shopkeeper with an Elvis quiff if that’s what you want.

I remember when Google got into a whole bunch of trouble by deliberately engineering their prompts to be race-blind. As a consequence, you could ask for "Picture of the Founding Fathers" or "Picture of Vikings" and get a variety of skin tones back.

So I don't think this is foolproof either. It's more about how the engine generating the image is tuned. You could very easily get a bunch of English bankers when querying for "Businessman in Delhi", depending on where and how the backlog of images is sourced. And "Urdu shopkeeper" will inevitably give you a bunch of convenience stores and open-air stalls in the background of every shot.

> There are a lot of men in India who wear a turban, but the ratio is not nearly as high as Meta AI’s tool would suggest. In India’s capital, Delhi, you would see one in 15 men wearing a turban at most.

Probably because most Sikhs are from the Punjab region?

Overfitting

It happens

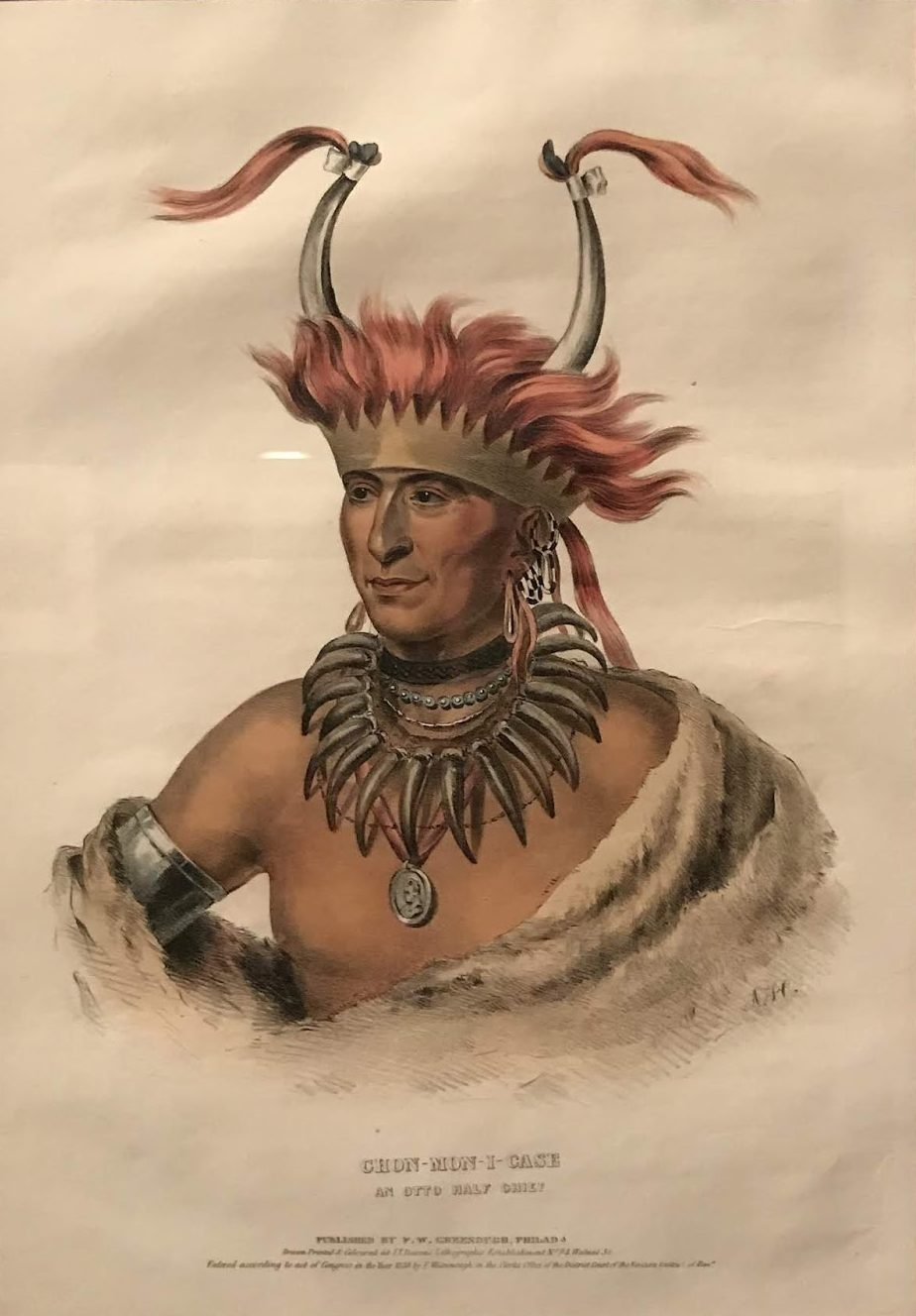

I'm not sure how AI could possibly be racist. (The image is of a supposed Native American, but my point still stands.)

The AI itself can't be racist, but it will propagate biases contained in its training data.

Turbans are cool and distinct.

Would they be equally surprised to see a majority of subjects in baggy jeans with chain wallets if they prompted it to generate an image of a teen in the early 2000s? 🤨

This is the best summary I could come up with:

The latest culprit in this area is Meta’s AI chatbot, which, for some reason, really wants to add turbans to any image of an Indian man.

We tried prompts with different professions and settings, including an architect, a politician, a badminton player, an archer, a writer, a painter, a doctor, a teacher, a balloon seller, and a sculptor.

For instance, it constantly generated an image of an old-school Indian house with vibrant colors, wooden columns, and styled roofs.

In the gallery below, we have included images of a content creator on a beach, on a hill, on a mountain, at a zoo, in a restaurant, and in a shoe store.

In response to questions TechCrunch sent to Meta about training data and biases, the company said it is working on making its generative AI tech better, but didn’t provide much detail about the process.

If you have found AI models generating unusual or biased output, you can reach out to me at im@ivanmehta.com by email and through this link on Signal.

The original article contains 956 words, the summary contains 164 words. Saved 83%. I'm a bot and I'm open source!

Whenever I try, I get Ravi Bhatia screaming "How can she slap?!"